Cut Glove – new gamepad-based software

Here is the new piece of software based around an Xbox 360 controller that I’ve been working on for about a year. It is called Cut Glove and it’s a live-sampling and processing patch that contains mappings based on video game mechanisms and metaphors.

This is what it looks like:

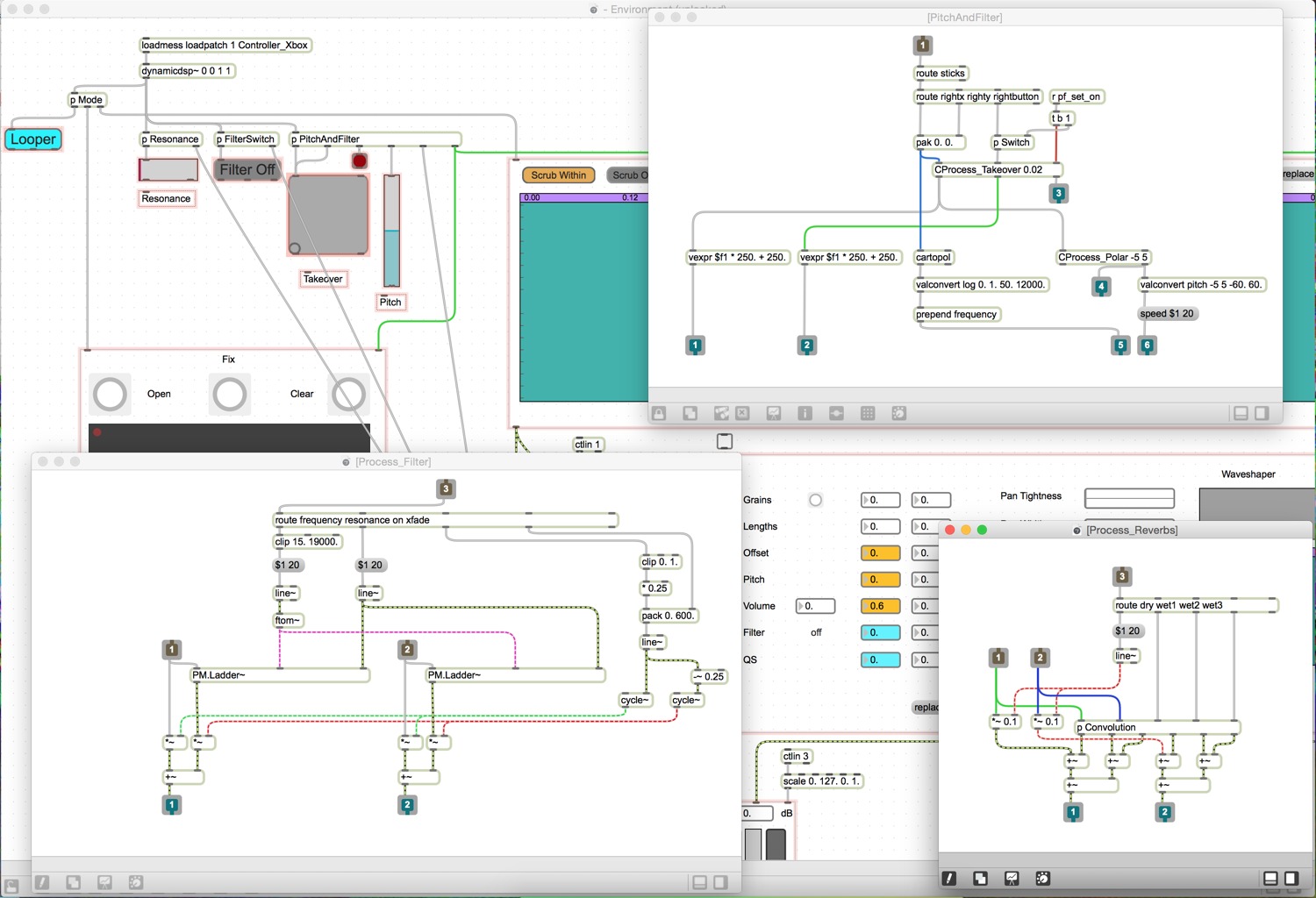

Some of the core ideas in the patch are based on sampling and processing modules I developed in The Party Van, another piece of software I’ve written. However, in Cut Glove I rebuilt everything completely from scratch, with tons of new features, more options, better overall sound, etc. At the core of Cut Glove is karma~, a Max external I recently released, which you can read about in detail here.

Before I go into detail about what Cut Glove does, and more importantly, how the mappings are implemented, here is the first of three Cut Glove performance videos in this blog post:

You can click here to download Cut Glove (v01), the required externals, and the instruction manual.

The rest of this blog post will go into detail about the background, development, and technical implementation of Cut Glove.

///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

Table of Contents

Background

Development

Initial Research

(more) Development

About Video Games

Mapping (is the Message)

DSP (the guts)

The Future

///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

Background

A few years ago I bought an Xbox 360. I hadn’t owned a video game system since I was much younger, but Ang and I wanted to get back into gaming. We got a few video games, and after a couple of months of playing I looked down at my hands (while playing UFC Undisputed 3) and was blown away by what I was seeing. My hands were executing all these complex, nested moves, and I wasn’t thinking about it at all. The way the controller is mapped to actions in that game is quite complex, with lots of simultaneous presses of buttons/bumpers/triggers, changes of direction on the analog sticks, and the D-pad controlling a variety of fighting moves. It got me thinking about virtuosity: on one level, the physical dexterity (virtuosity) required to fluidly execute all of those movements, and, more excitingly, the mental abstraction (virtuosity) needed to think in that nested/complex manner. I realized then that I wanted to use this latent virtuosity I had developed over the years for musical purposes. To “recycle virtuosity”, as Pierre Alexandre Tremblay would say in this paper.

In some ways, I had already been doing similar things, for example, by applying some of my physical dexterity (and accompanying mental facility) as a multi-instrumentalist to control other sound sources, or by playing new/invented instruments. Some of this approach was originally inspired by Pierre Alexandre Tremblay’s (PA’s) work in concatenative synthesis (a type of granular synthesis based on audio analysis) and by our many discussions about approaching electronic instruments in an instrumental manner. That sort of thinking permeates much of what I do.

Instrumental expressivity comes into play very differently when using a gamepad, because a gamepad is engineered to be ergonomic, which is nearly the opposite of embodiment in the performative sense of the word. The move from the Atari “broom-handle” joystick towards the more compact analog sticks we know today came from designers wanting to overcome problems with repetitive strain injury by “turn[ing] out smaller joysticks, to be operated with the thumb” since this meant there would be “no loss in control, less force exerted, and the distance the stick has to travel before the switch is activated is shorter” (Cuciz 2002).

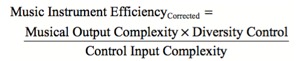

This ergonomic lack of embodiment is apparent in the Cut Glove performance videos on this page: I am literally disembodied, with little physical activity visible even in the enlarged gamepad overview. This contrasts with the explicitly embodied designs of many digital musical instruments, where embodiment and physicality sometimes seem to be at odds with functionality and expressiveness. Fels et al. argue that effort and expression are tied together, stating that “effort and the sound reinforce one another, increasing the expressivity of the performance” (Fels, Gadd, and Mulder 2001). When designing an instrument/interface around an ergonomic controller, I think a more applicable model is the one proposed by Sergi Jordà in this paper, where he relates musical instrument efficiency to musical output complexity and control input complexity (Jordà 2005).

diagram from Sergi Jordà

Mortal Kombat has a special place in my heart – it was the first game in which I saw the button layout/mapping and thought “wow, that’s different.” The general mapping paradigm and the implementation of combos in Mortal Kombat were incredibly novel, and required a completely different way of thinking about things. Ideas like having a dedicated block button, or holding down a button for a specific move to happen, or even using “juggling” as the main mechanism for pulling off a combo – these things blew my mind. To this day I remember most of the combos and special moves for Street Fighter II and Mortal Kombat, and could likely hold my own at the arcade.

Let’s fast forward many years to when I was making my own instruments, and later software. From making and playing many instruments (both hardware and software based) I came to realize and appreciate the importance of interface, and how it maps to the gestural and sonic output of an instrument. Regardless of what the sounds are, and how they are physically (or virtually) produced, the manner in which we create them is critical. This is the case for physical instruments of course, as there are many considerations in terms of physical sonic characteristics and general ergonomics that affect how an instrument sounds and feels. For software instruments, the mapping in many ways IS the instrument.

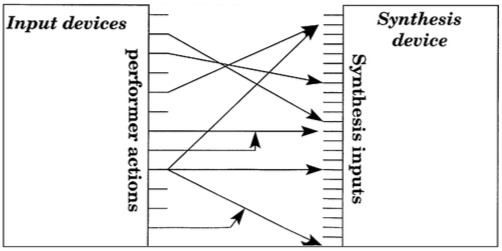

Mapping in digital instruments is a complex subject. In most software systems, mapping is implemented via MIDI-learn, where you can automatically map software parameters to a physical controller in a direct one-to-one mapping. One-to-one mapping means that a specific knob or slider on an interface is mapped directly to a single parameter, say, the cutoff of a filter or the decay time of a reverb. With more complex setups, you can adjust the minimum, maximum, or curve of the direct mapping, or map a single physical control to multiple parameters (one-to-many). Mapping multiple physical controllers (or a complex multidimensional input data stream) to a single parameter (many-to-one) is also possible, and even more complex.
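To make the three mapping types concrete, here is a minimal Python sketch (Cut Glove itself is a Max patch, so this is only an illustration). The parameter names, ranges, the power-law `curve`, and the rule used to combine inputs in `many_to_one` are all hypothetical, and inputs are assumed to arrive already normalized to the range 0 to 1.

```python
from typing import Dict


def scale(x: float, lo: float, hi: float, curve: float = 1.0) -> float:
    """Map a normalized control value x in [0, 1] onto [lo, hi].

    curve > 1 spends more of the control's travel near lo (useful for
    frequencies); curve = 1 is a plain linear mapping.
    """
    x = min(max(x, 0.0), 1.0)  # clamp out-of-range input
    return lo + (hi - lo) * (x ** curve)


def one_to_one(knob: float) -> Dict[str, float]:
    # One knob drives exactly one parameter.
    return {"filter_cutoff_hz": scale(knob, 20.0, 20000.0, curve=3.0)}


def one_to_many(knob: float) -> Dict[str, float]:
    # One knob drives several parameters, here in opposite directions.
    return {
        "filter_cutoff_hz": scale(knob, 200.0, 8000.0, curve=2.0),
        "reverb_decay_s": scale(1.0 - knob, 0.5, 6.0),
    }


def many_to_one(stick_x: float, stick_y: float, trigger: float) -> Dict[str, float]:
    # Several inputs collapse into one parameter; the combining rule is arbitrary.
    energy = (stick_x + stick_y) / 2.0 * trigger
    return {"grain_density": scale(energy, 1.0, 100.0)}


print(one_to_many(0.5))  # {'filter_cutoff_hz': 2150.0, 'reverb_decay_s': 3.25}
```

Even in this toy version, the musically interesting decisions live in the choice of ranges, curves, and combining rules rather than in the plumbing.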

Here is a diagram from a paper by Andy Hunt and Marcelo M. Wanderley that illustrates how a variety of performer actions can be mapped to a variety of synthesis parameters, showing a combination of one-to-many and many-to-one mappings.

diagram from Andy Hunt and Marcelo M. Wanderley

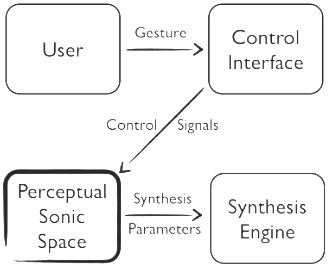

Another approach, by Stefano Fasciani and Lonce Wyse, allows for complex (non-one-to-one) mappings. It does so by using analysis to determine the maximum range that a set of input parameters or audio input can produce, and then automatically mapping this data to the maximum perceptual range that a set of synthesis or control parameters can produce. This type of dimensionality reduction allows you to produce the widest possible variety of sounds from any given input type. Essentially, it’s an analysis-based way to get the most ‘bang for your buck,’ if bang is a super broad range of sounds, and buck is whatever input you want. You can read more about that (and see some videos) here.

diagram from Stefano Fasciani and Lonce Wyse

Regarding the above examples/models, I have some reservations about the idea of having any given input mapped to as broad an output as possible, as limitations and constraints are what define most instruments. To quote myself in an interview I did for monome a couple of years ago, “There’s only so much capacity to interface with something, so an instrument must be specific and limited in what it does to be generic and limitless in what it can do.”

In addition to these mapping-specific concerns, there is the general idea that expressivity is not directly tied to control or mapping. A paper by Christopher Dobrian and Daniel Koppelman discusses the importance of virtuosity in the expressiveness of a digital musical instrument and asserts that having control over something does not necessarily mean it will be used expressively. Virtuosity, they argue, is central to an instrument’s ability to be expressive. I wholeheartedly agree with this, although in the context of Cut Glove, expressivity comes from recycled virtuosity rather than from an inherently coupled musical virtuosity.

Some more practical and interesting approaches to mapping come from two performers whose work I admire: Sam Pluta and Pierre Alexandre Tremblay. Both of them are performers AND programmers. I think that that intersection is critical, as you need to be able to see both sides of the thing you are working on in order to make musically appropriate decisions about mapping and implementation.

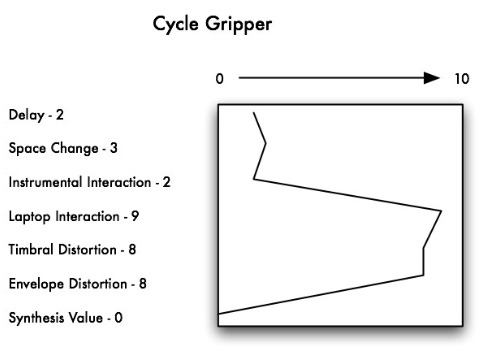

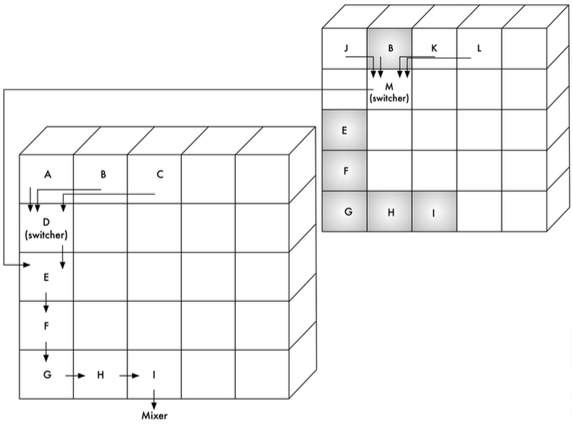

Sam Pluta’s setup is based around a navigable matrix of processing modules that he has stratified using what he calls Sound Module Vectors. Sound Module Vectors are a way to “describe the sonic uniqueness of a sound module” by assigning certain descriptors values so that they can be compared and navigated in a performance context (Pluta 2012).

Vector for Cycle Gripper showing its descriptors. Diagram from Sam Pluta

He then creates complex combinations of these vectors as his primary (abstracted) instrumental control. He plays that dimensional space, using this abstract model of thinking. Physically, he uses a Manta controller to engage and manipulate these modules. You can read more about Sam’s setup in his thesis here.
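As a rough illustration of how descriptor vectors can be compared and navigated, the Python sketch below ranks sound modules by their Euclidean distance from a target vector. The descriptor names, module names, and values are hypothetical, and plain Euclidean distance is an assumption made for simplicity; Sam’s thesis is the place to look for how his actual Sound Module Vectors work.

```python
import math
from typing import Dict, List

# Hypothetical descriptor axes, each normalized to [0, 1].
DESCRIPTORS = ("density", "pitchiness", "brightness", "roughness")

Vector = Dict[str, float]


def distance(a: Vector, b: Vector) -> float:
    """Euclidean distance between two module vectors."""
    return math.sqrt(sum((a[d] - b[d]) ** 2 for d in DESCRIPTORS))


def rank_modules(target: Vector, modules: Dict[str, Vector]) -> List[str]:
    """Module names ordered from closest to farthest from the target sound."""
    return sorted(modules, key=lambda name: distance(target, modules[name]))


# Hypothetical modules and values, for illustration only.
modules = {
    "granular_stutter": {"density": 0.9, "pitchiness": 0.2, "brightness": 0.6, "roughness": 0.8},
    "pitched_drone": {"density": 0.3, "pitchiness": 0.9, "brightness": 0.4, "roughness": 0.1},
    "noise_burst": {"density": 0.7, "pitchiness": 0.0, "brightness": 0.9, "roughness": 0.9},
}

wanted = {"density": 0.4, "pitchiness": 0.8, "brightness": 0.3, "roughness": 0.2}
print(rank_modules(wanted, modules))  # ['pitched_drone', 'granular_stutter', 'noise_burst']
```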

Pierre Alexandre Tremblay’s (PA’s) setup is based around several Sandbox#n setups that he has created. All of these setups are also based on conceptual models of mapping that are then implemented in a variety of controllers. In PA’s case, these controllers are an electric bass, some fader-box type controllers (more recently replaced by an iPad), and some foot controllers. In his setup, the bass itself is the centerpiece of control as well as the generator of sound. This is because he is very much a believer in the idea of recycled virtuosity. He has many decades of bass playing under his belt – so, for him, being able to apply that ingrained ability to an electronic instrument allows him a kind of control that is both nuanced and expressive.

This fluid, instrumental approach to mapping and performance was something I wanted to focus on in the development of Cut Glove, and I will expand on it in the following sections.

///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

Development

My own personal approach to software design is similar to PA’s approach, as I often use electronics while performing on another instrument. Towards that end, I developed a complex setup around monome and arc controllers in the form of The Party Van. Many of the design decisions I made when programming The Party Van dealt with the idea of creating complex and rich output from minimal user input. The Party Van makes extensive use of audio analysis to dynamically/intelligently create presets, to adapt synthesis pitches, to automatically sample/play audio, and in many other ways as well. You can read more about this on page 24 of The Party Van manual here. I wanted to be able to put systems in motion, and then to engage with them as needed but in as macro a way as possible. As such I have no discrete control over individual parameters in the mapping of The Party Van at all (other than volume).

Cut Glove incorporates much of the analysis-based control that I originally implemented in The Party Van, but its general mapping paradigm is very different. In The Party Van the primary mode of input is that of button presses; The Party Van is largely a world of on/offs. Cut Glove is primarily about continuous control; a world of gestures and wiggles.

An aspect of Cut Glove that is not lost on me is that of accessibility. I love monome controllers for their simplicity and openness as well as their general design and sustainability, but they are not the cheapest controllers available. The Xbox 360 controller is cheap, readily available, and well made. It also feels really good in the hands, is easy to interface with Max (via the [hi] object), and its analog sticks produce 16-bit control data, which is a higher resolution than most other continuous controllers offer (most MIDI controllers offer only 7 bits).
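To put the resolution difference in numbers: an n-bit control can send 2^n distinct values. The Python sketch below compares the smallest possible step for a 7-bit MIDI control, the 8-bit triggers, and a 16-bit stick axis, using a hypothetical 10-second buffer as the thing being scrubbed.

```python
def levels(bits: int) -> int:
    """Number of distinct values a control of this resolution can send."""
    return 2 ** bits


def step_size(bits: int, span: float) -> float:
    """Smallest change the control can make when mapped across `span` units."""
    return span / (levels(bits) - 1)


# Hypothetical example: a control scrubbing through a 10-second (10000 ms) buffer.
for name, bits in [("7-bit MIDI CC", 7), ("8-bit trigger", 8), ("16-bit stick axis", 16)]:
    print(f"{name}: {levels(bits)} levels, {step_size(bits, 10000.0):.3f} ms per step")
```

Across that 10-second span, a 7-bit control moves in steps of roughly 79 ms, while a 16-bit axis moves in steps of about 0.15 ms, small enough that the steps effectively disappear.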

Evan-Amox. Black Xbox 360 S wireless controller. 14 June 2015. Wikipedia.

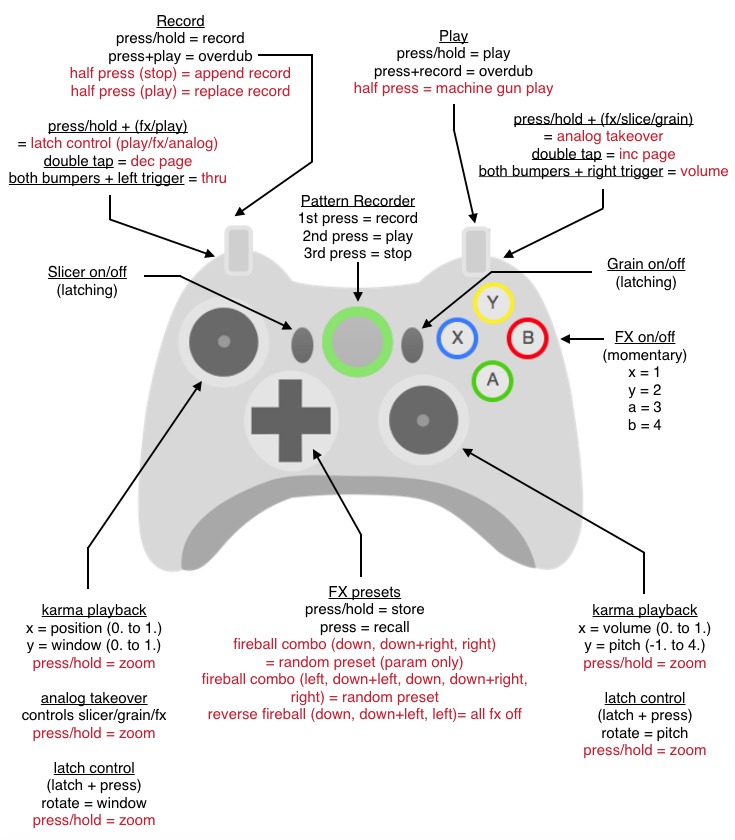

I spent a long time thinking about how best to approach the mapping using the Xbox controller. The ergonomics of the controller imply a certain kind of behavior, or at least a hierarchy. For example, you can’t (easily) access the x/y/a/b buttons AND the right analog stick at the same time, and the triggers invite more play than the bumper buttons. There are also two analog sticks, each giving 16-bit resolution on each axis. The triggers are continuous controls as well, but only 8-bit. I wanted to consider all of these things rather than treat the gamepad as a near-arbitrary collection of buttons and continuous controllers.

The answer, for me, came in approaching it like video game mapping. I wanted to avoid having flat, or unidimensional, controls, where each control serves only one function. That being said, I did lay out a conceptual hierarchy for the kinds of things I wanted to control and the manner in which I wanted to control them.

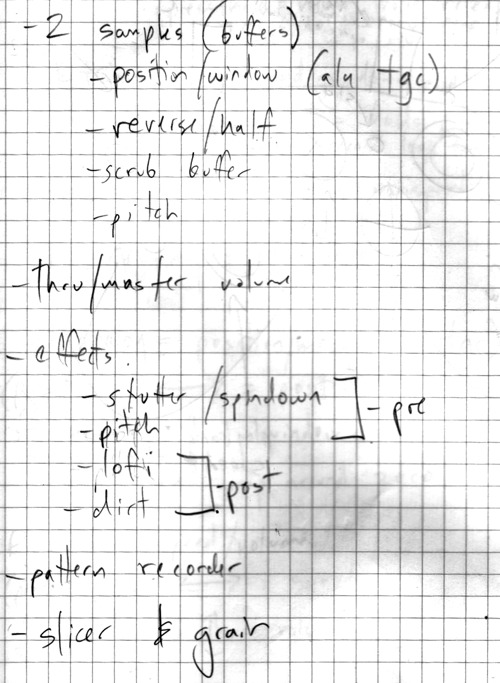

This is the initial rough sketch I made for how I wanted to map things, along with the first page of the individual sketches.

This was the basis that I then built on to include nested controls, special moves, and other video-game-specific mechanisms.

Here is a condensed view of the overall mapping in Cut Glove. (things in red represent more complex/nested controls)

One of the first people I spoke to about the idea was Alex Harker, since he had worked on gamepad stuff for several years. We met up, I talked to him about the idea, and we played video games. I showed him some of my favorites, in terms of gameplay/concept as well as novel mapping ideas. At the time I was taking a more academic approach, and had the intention of publishing a paper about the idea, ideally with Alex and PA on board. My idea was centered around Metaphors and Mechanisms. I wanted to break down the mechanisms used in video games into a kind of grammar and syntax, and then explore these ideas via novel gameplay metaphors.

For largely philosophical/political reasons I abandoned the idea of doing this as an academic paper, and instead focused on a practice-based approach, knowing that the same research would end up in a blog post. (You can click here to read a blog post about openness and sharing which relates to this decision to not pursue traditional academic publishing.)

The next section is a rough outline of what I had worked out and originally wanted to incorporate into an academic paper. Later in this post I will return to many of the ideas presented here (specifically the Braid-related ones).

///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

Initial Research

This section contains my original sketches and ideas for the game metaphors (gameplay and design) and mechanisms (control and mapping).

Metaphors:

Braid

- Rewind at any point (use mapping/gesture generation as a constant stream that you can ‘scrub’ through)

- Time compression/expansion (go into slow-mo mode to create hyper detailed gestural data ‘out of time,’ which will then happen at normal speed ‘in time’)

- Ghost/repeating gestures (be able to layer multiple levels/dimensions of gestural data (which itself can be time remapped) for instant deployment)

Fez

- 2d to 3d compression/reorientation/recontextualization

Halo & Call of Duty

- Navigation through a (descriptor) space in flattened 3d, or real 3d

SSX & Tony Hawk

- Multiple/nested sequence/combinations, dual analog ‘slow-mo’ combo

Dragon Age

- Modifier + analog for rotation/complex menus

- Modifier brings 4 new buttons (A/B/X/Y) into ‘focus’
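The Braid ideas in the list above treat a gesture as a recorded stream that can be scrubbed, rewound, and replayed at a different speed. Here is a minimal Python sketch of that data structure under some simplifying assumptions: a single control stream, sample-and-hold playback (no interpolation), and hypothetical class and method names. It is not how Cut Glove implements these ideas.

```python
from bisect import bisect_right
from typing import List, Tuple


class GestureTape:
    """Hypothetical recorder for one control stream, stored as (time_s, value)."""

    def __init__(self) -> None:
        self.samples: List[Tuple[float, float]] = []

    def record(self, t: float, value: float) -> None:
        # Assumes timestamps arrive in increasing order.
        self.samples.append((t, value))

    def value_at(self, t: float) -> float:
        """Scrub: the most recent value recorded at or before time t."""
        if not self.samples:
            return 0.0
        times = [s[0] for s in self.samples]
        i = bisect_right(times, t)
        return self.samples[max(i - 1, 0)][1]  # clamp to the first sample

    def rewind_to(self, t: float) -> None:
        """Braid-style rewind: discard everything recorded after time t."""
        self.samples = [s for s in self.samples if s[0] <= t]

    def rescaled(self, factor: float) -> "GestureTape":
        """Copy with every timestamp multiplied by factor. A gesture performed
        in 4x slow motion plays back at normal speed with factor=0.25."""
        out = GestureTape()
        out.samples = [(t * factor, v) for t, v in self.samples]
        return out


tape = GestureTape()
for t, v in [(0.0, 0.0), (1.0, 0.5), (2.0, 1.0), (3.0, 0.25)]:
    tape.record(t, v)

print(tape.value_at(1.5))   # 0.5: scrub into the middle of the gesture
tape.rewind_to(2.0)         # undo the last second
fast = tape.rescaled(0.25)  # slow-mo performance, replayed "in time"
print(fast.value_at(0.3))   # 0.5
```

Layering “ghost” gestures would then amount to keeping several tapes and reading them all at the same playback time.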

Mechanisms:

Event generation

Mapping Hierarchy

- Events (either presses, gestures (circle/semicircle), multiple simultaneous presses, double tap, press/hold)

- Modifiers (multiple level/depths)

- Sequences (Combos) (up-up-down-down-left-right-etc…)

Gestures

- Can be independent (circle, flick, semi-circle)

- Context specific (in certain modes, gestures are enabled)

Grammar

- Can have gestural grammar (punctuation). A press at the start, middle, or end (à la Street Fighter fireball, or Mortal Kombat enhanced moves)

Gesture stream

- Multidimensional fusion (2x analog and/or 2x triggers)

- Spatial navigation

Feedback

- Access LEDs via driver?

- Rumble on valid ‘move’

- Vibration as state feedback (e.g. grain density, low frequency transposition, overall roughness/brightness)

Taxonomy:

Event

- Presses, gestures (circle/semicircle), simultaneous presses, double tap, press/hold

Modifier

- Single key press that remaps other buttons

- Can be multiple depth layers

Gesture

- Circle, semicircle, flick

Combo

- Sequence of events, gestures, with or without modifiers

Grammar

- Gestural syntax and timing. A modifier happening at the start (UFC) or at the end (Street Fighter)
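The event types in the taxonomy (tap, press/hold, double tap) can be told apart from press and release timestamps alone. A minimal Python sketch, assuming hypothetical thresholds of 300 ms for a hold and 250 ms for the gap between the two taps of a double tap:

```python
from typing import List, Tuple

HOLD_MS = 300        # hypothetical: presses at least this long count as holds
DOUBLE_TAP_MS = 250  # hypothetical: max gap between the two taps of a double tap


def classify(presses: List[Tuple[int, int]]) -> List[str]:
    """Label a time-ordered list of (down_ms, up_ms) presses of one button
    as 'tap', 'double_tap', or 'hold' events."""
    events: List[str] = []
    i = 0
    while i < len(presses):
        down, up = presses[i]
        if up - down >= HOLD_MS:
            events.append("hold")
            i += 1
            continue
        # Short press: pair it with the next press if that one is also short
        # and starts soon enough after this one is released.
        if i + 1 < len(presses):
            next_down, next_up = presses[i + 1]
            if next_down - up <= DOUBLE_TAP_MS and next_up - next_down < HOLD_MS:
                events.append("double_tap")
                i += 2
                continue
        events.append("tap")
        i += 1
    return events


print(classify([(0, 100), (200, 280), (1000, 1500)]))  # ['double_tap', 'hold']
```

This works on a completed list of presses; a live version would have to wait `DOUBLE_TAP_MS` after a short press before committing to a single tap, since a second tap may still arrive.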

Tools:

Components

- Adapted driver for LED/rumble feedback

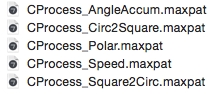

- Circle-to-square analog pad (CProcess abstractions)

- Syntax to create mappings via text (“F, D, B, A+B”)

Training Mode

- You practice/validate gestures/sequences/combinations with realtime (visual?) feedback

- Have beginner/intermediate/advanced modes and/or a slider which tightens up tolerance/latency as you improve at the gestures
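The text-based combo syntax and the adjustable tolerance of the training mode could fit together roughly as follows. This Python sketch parses a string such as “F, D, B, A+B” into steps (with `+` meaning simultaneous presses) and checks whether the most recent inputs match, with a maximum allowed gap between steps standing in for the beginner/advanced tolerance. The function names, the input format (press events only, no releases), and the timing values are assumptions.

```python
from typing import FrozenSet, List, Sequence, Tuple

Step = FrozenSet[str]     # buttons that must be down together
Input = Tuple[int, Step]  # (timestamp_ms, buttons pressed)


def parse_combo(text: str) -> List[Step]:
    """Parse a text combo such as 'F, D, B, A+B' into a list of steps."""
    steps = []
    for token in text.split(","):
        token = token.strip()
        if token:
            steps.append(frozenset(part.strip() for part in token.split("+")))
    return steps


def matches(combo: Sequence[Step], inputs: Sequence[Input], max_gap_ms: int) -> bool:
    """True if the latest inputs spell out the combo, in order, with no gap
    between consecutive steps longer than max_gap_ms."""
    if not combo or len(inputs) < len(combo):
        return False
    tail = inputs[-len(combo):]
    if any(pressed != wanted for (_, pressed), wanted in zip(tail, combo)):
        return False
    times = [t for t, _ in tail]
    return all(later - earlier <= max_gap_ms for earlier, later in zip(times, times[1:]))


combo = parse_combo("F, D, B, A+B")
played = [(0, frozenset({"F"})), (120, frozenset({"D"})), (230, frozenset({"B"})),
          (350, frozenset({"A", "B"}))]
print(matches(combo, played, max_gap_ms=150))  # True: "beginner" tolerance
print(matches(combo, played, max_gap_ms=100))  # False: tighter "advanced" tolerance
```

A training-mode slider would then simply shrink `max_gap_ms` as the player improves.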

///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

(more) Development

The idea of using metaphors as a mapping mechanism is nothing new. This paper by Sidney Fels, Ashley Gadd, and Axel Mulder discusses using metaphors (physical and conceptual) to increase general instrumental expressivity. One metaphor they discuss is virtual sound sculpting with your hands: moving your hands around controls synthesis parameters. One can see the attraction here. By using a conceptual model (metaphor), one can map in ways more complex than direct one-to-one mappings (which can tend to produce boring results) and still retain a high level of control. I would be applying a similar idea, but with a more conceptual model of metaphor rather than a physically bound one.

I spoke to Alex Harker about this idea and he gave some valuable insight, which ended up in the metaphors and mechanisms presented in the previous section. In addition to helping me refine the conceptual side of what I wanted to do, he was incredibly helpful and generous with some of the tools he had built for a gamepad-controlled performance patch. At the time, Alex had been using a gamepad-based patch that allowed for complex scrubbing through a prerecorded buffer as well as 2d navigation of a multidimensional descriptor space.

After I turned Alex on to the Xbox 360 controller, he had to adapt his patch to the way its analog sticks work. The main difference was that the Xbox controller’s sticks move within a circular area, whereas the Logitech controller he had been using had a square area, with corners. To resolve this, as well as other analog-stick-related issues, he programmed a set of abstractions (CProcess), which I use quite extensively in Cut Glove. These abstractions do some low-level but very useful things, like mapping circular analog spaces to square ones, calculating the speed of the analog stick, and letting you make concentric circles with the gamepad to incrementally modify a parameter.
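For readers curious about what these stick-processing jobs involve, here is a minimal Python sketch of all three. The mapping CProcess actually uses is not described here; this sketch uses simple radial stretching, one common approach among several. The function and class names, the 0.5 deadzone, and the convention that stick values arrive normalized to the range -1 to 1 with y pointing up are all assumptions.

```python
import math
from typing import Optional, Tuple


def _clamp(a: float) -> float:
    # Real sticks can slightly overshoot the ideal circle.
    return max(-1.0, min(1.0, a))


def circle_to_square(u: float, v: float) -> Tuple[float, float]:
    """Radially stretch a point in the unit disc so the circle's edge lands on
    the square's edge (the 45-degree point on the rim maps to a corner)."""
    m = max(abs(u), abs(v))
    if m == 0.0:
        return 0.0, 0.0
    k = math.hypot(u, v) / m  # 1 on the axes, sqrt(2) on the diagonals
    return _clamp(u * k), _clamp(v * k)


def stick_speed(p0: Tuple[float, float], p1: Tuple[float, float], dt: float) -> float:
    """Distance travelled between two readings divided by the time between them."""
    return math.hypot(p1[0] - p0[0], p1[1] - p0[1]) / dt


class TurnCounter:
    """Accumulates signed turns of the stick around its center
    (positive = counter-clockwise when y points up)."""

    def __init__(self, deadzone: float = 0.5) -> None:
        self.deadzone = deadzone
        self.last_angle: Optional[float] = None
        self.radians = 0.0

    @property
    def turns(self) -> float:
        return self.radians / (2 * math.pi)

    def update(self, x: float, y: float) -> float:
        if math.hypot(x, y) < self.deadzone:
            self.last_angle = None  # near the center the angle is unreliable
            return self.turns
        angle = math.atan2(y, x)
        if self.last_angle is not None:
            # Wrap the step into [-pi, pi) so crossing the +/-pi seam is not a jump.
            step = (angle - self.last_angle + math.pi) % (2 * math.pi) - math.pi
            self.radians += step
        self.last_angle = angle
        return self.turns


counter = TurnCounter()
for i in range(9):  # one counter-clockwise lap in eight 45-degree steps
    a = i * math.pi / 4
    counter.update(math.cos(a), math.sin(a))
print(round(counter.turns, 6))  # 1.0
```

Scaling `counter.turns` by a small increment per lap gives the “draw circles to nudge a parameter” behavior, with direction of rotation deciding whether the parameter goes up or down.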

Armed with these abstractions for the control of the analog sticks I was able to implement some of the gamepad specific mechanisms and secondary mapping layers discussed in the following sections.

///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

About Video Games

Growing up playing video games, I came to appreciate all kinds of games. I spent hundreds of hours on Super Mario Bros., Castlevania, Contra, and other old school NES titles. Zelda and Metroid really stood out for me. There was an epic, almost magical quality to them. While playing them I would be filled with a profound feeling – due to the heavy sense of mood, evocative music, and incredibly deep gameplay. During this era of video games, genres had not yet been codified, so games all seemed to come from radically different places. I really appreciated how different these games were, and more so, how far they went in differing directions.

I later got into playing first person shooters (Halo specifically). This was my first introduction to the dual-analog controller paradigm that’s more or less the standard now for video game consoles. It was very difficult at first – trying to navigate a 3d game space while having decoupled control over avatar direction of movement and direction of aim – but eventually that became second nature through the “altering [of my] visual attentional processing” (Green and Bavelier 2003). That internalized sense of control, movement and mapping eventually became central to how I wanted to approach mapping in Cut Glove.

I also got into playing real-time strategy games (especially StarCraft and WarCraft III). In these games there are several races that have complexly intertwined relationships and playing paradigms. In StarCraft, the Zerg specialize in having swarms of weak/disposable units, while the Protoss are nearly the opposite, having fewer but more powerful units. Each race requires a different mindset while playing and has certain strengths and weaknesses that need to be considered when combating other races. I started watching tons of StarCraft gameplay videos on YouTube, at first to figure out how to best approach a particularly difficult level, and then later to enjoy the sheer expertise displayed. A level that would take me over 45 minutes to beat could be defeated in minutes using some really clever gameplay. This was the product of incredibly creative thinking coupled with virtuoso dexterity.

Terrans fighting the Zerg

Through StarCraft I was exposed to the world of competitive video gaming. Not in the ‘playing Mortal Kombat at the arcade’ sense but in the ‘sponsored professional cyber athlete competing in an arena full of spectators’ kind of way. In South Korea you can have a career as a professional StarCraft athlete, straight up. Aside from the fascinating sociological aspects of that, I was interested in the staggering efforts put into training and preparation. There is a metric that is used in this world: actions per minute (APM). APM refers to the total number of actions that a player can perform in a minute. A professional StarCraft player would typically have an APM between 300 and 400 (an action every 150 to 200 ms), which could spike up to 800, the equivalent of typing 160 words per minute. To put that into perspective, that is so fast that the rate of activity on the mouse and keyboard could almost be heard as a pitch.
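The arithmetic behind these figures is simple enough to check. A small Python sketch, assuming the usual typing convention of five keystrokes per word:

```python
def ms_per_action(apm: float) -> float:
    """Average time between actions, in milliseconds."""
    return 60_000.0 / apm


def actions_per_second(apm: float) -> float:
    """APM expressed as a rate in hertz (actions per second)."""
    return apm / 60.0


def typing_wpm(apm: float, keystrokes_per_word: float = 5.0) -> float:
    """Typing speed with the same number of keystrokes, using the common
    convention of five keystrokes per 'word'."""
    return apm / keystrokes_per_word


for apm in (300, 400, 800):
    print(apm, ms_per_action(apm), round(actions_per_second(apm), 1), typing_wpm(apm))
```

At 800 APM this gives 75 ms per action, or about 13 actions per second, just under the roughly 20 Hz usually cited as the lower limit of human hearing, which is why the clicking only “almost” becomes a pitch.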

The idea here is that you maintain an incredibly high granularity of action (and its associated thinking), often with redundant moves/commands, so that when you issue new commands you can slide into this super-stream of physical and mental activity. It’s all about near superhuman rates of action and thought: pure athleticism, but as applied to a virtual activity.

More recently I’ve gotten into games that force you to think in a completely different way. The most profound of these games has been Braid. Braid is the product of one developer, Jonathan Blow. The game is astonishing in many respects, not least its unique gameplay mechanisms based on the manipulation of time. Beyond these mechanisms, the way the non-linear storytelling is woven together with genuinely challenging puzzles, all set within a beautiful art style, is extraordinary. I highly recommend watching this interview with Jonathan Blow, where he talks about the idea of entertainment and what it means in the context of video games.

opening screen of Braid

Most of the game mechanics in Braid deal with the treatment of time in an unusual way. Throughout the game you can rewind time at any point. The initial introduction to this is that when you die, you simply rewind time until you are no longer dead. This gets pushed further and further, until you are forced to manipulate this flow of time in order to pass more complex levels and puzzles. In some cases, you have to quickly rewind/resume time in order to hover in the air until a time-immune cloud arrives under your feet. At other points in the game, you have multiple simultaneous timelines where you flip a lever, rewind time, and do another activity while a branched-off version of yourself does the activity that you had done before rewinding. After playing Braid for a couple of days, one’s real-world sense of time becomes distorted.

The game is not only challenging and incredibly satisfying to play, it also forces you to look at the world in a different way. Non-linearity is present in every part of Braid. When you begin the game, you start in World 2 and carry on from there. I won’t get into the story here so as to not spoil anything, but I will say the game’s overall story arc also ties into this manipulation of time in a beautiful and poetic way. If you have played the game and want to see some interesting meta-time manipulations, check out Dan Tramte’s page on Braid.

Fez is another indie game that, in addition to being an engaging gaming experience, contains some unique and complex game mechanics. In Fez you play as Gomez, a 2d sprite character who, through a glitch in the game world (which causes the game to crash and reboot), becomes aware of a third dimension and gains the ability to navigate the 3d version of his 2d world. So, at any point during the game you can rotate between four 2d views of a 3d world, which are re-flattened each time you rotate – meaning that each 2d representation of the 3d world has a different layout and perspective. Things that are far away in one view can be close in another, and things behind objects become revealed as you rotate perspectives. Throughout Fez you navigate the (3d) world this way, constantly needing to reorient your view on that 3d world in order to advance through the game.

I don’t think it is a coincidence that most of the games I’ve highlighted in this section are made by indie developers. The gameplay mechanisms and general genre-shattering approaches used are something that is unlikely to come out of a commercial game development firm. It’s a safer bet to develop a new Call of Duty game than it is to take a chance on an oddball game like Braid or Fez. There are obvious parallels here to my thoughts on independent thinking in creative music making.

Indie Game: The Movie is a documentary on indie game developers (including Jonathan Blow (Braid) and Phil Fish (Fez)) that gives valuable insight into what they do (and why they do it). In the documentary the developers detail how difficult it was to develop these games in such a stagnant industry.

Playing video games requires a mental and physical dexterity which is not dissimilar to instrumental performance technique. This is obviously the case in games like Guitar Hero where the games are designed “in exactly this way to create new modes of musicality” as put by Kiri Miller in this paper. You practice in order to be able to reduce the physiological friction between thinking about doing something and that something happening. Anyone who has put their “10,000 hours” into gaming has a wealth of tappable potential to explore.

Tying back to the idea of using mapping metaphors to extend the expressivity and musicality of a software-based instrument – one can see how these gameplay approaches and mechanics are rich with musical and mapping implications.

///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

Mapping (is the Message)

General Mapping

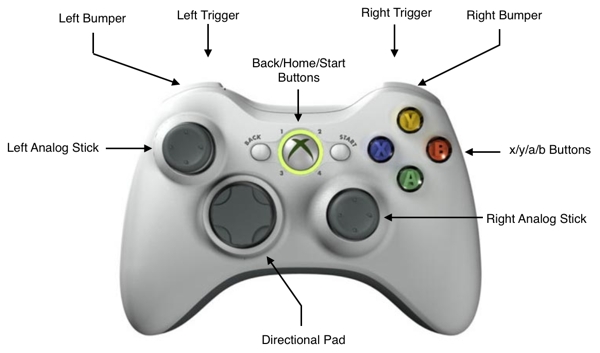

My initial considerations when coming up with the mapping in Cut Glove were, fundamentally, what I wanted to control, and how I wanted to control it. The Xbox 360 controller has two analog sticks, two analog triggers, 11 buttons, and a directional pad. The analog sticks are high resolution (16-bit), giving me four axes of high-resolution continuous control. Beyond their resolution, the sticks’ compact size and ergonomic placement make them ideal for gestural control and spatial movement. The push-buttons lend themselves to on/off functionality, such as enabling and disabling certain sections of a patch. The triggers and bumpers are reached by a different part of the hand (the index fingers), allowing for completely independent areas of physical control. Finally, there are the D-pad’s four buttons, with their intrinsic directional mapping possibilities. Taken together, this is the flat surface of mappings: the collection of individual controls.

You can see that the controller is almost a mirror image of itself along the vertical axis. The left analog stick and the x/y/a/b buttons occupy the same upper thumb position on either side, and the D-pad and right analog stick occupy the same lower thumb position. Ergonomically speaking, these upper and lower positions are mutually exclusive: you cannot easily hit the x/y/a/b buttons AND control the right analog stick at the same time. The middle three buttons (back/home/start) are within reach of both thumbs, but are not easy to engage accidentally. Along the top and back are the left and right bumpers and triggers. The bumper and trigger on each side share an index finger, so they are also ergonomically mutually exclusive.

So in the controller itself we have a combination of continuous and binary controllers arranged into four surface subsections (right thumb, right index, left thumb, left index). Each subsection has mutually exclusive access to both continuous and binary controls, so any finger touching the controller can engage in either type of control.

[su_pullquote align=”right” class=“”]The control and mapping hierarchy I wanted to establish would allow for maximum gestural control and playability on this flat mapping plane.[/su_pullquote]

The control and mapping hierarchy I wanted to establish would allow for maximum gestural control and playability on this flat mapping plane. Before getting into the nested and video game-specific mappings, I wanted to have something musically useful. In fact, this flat mapping accounts for 90% of my playing in the Cut Glove videos on this page.

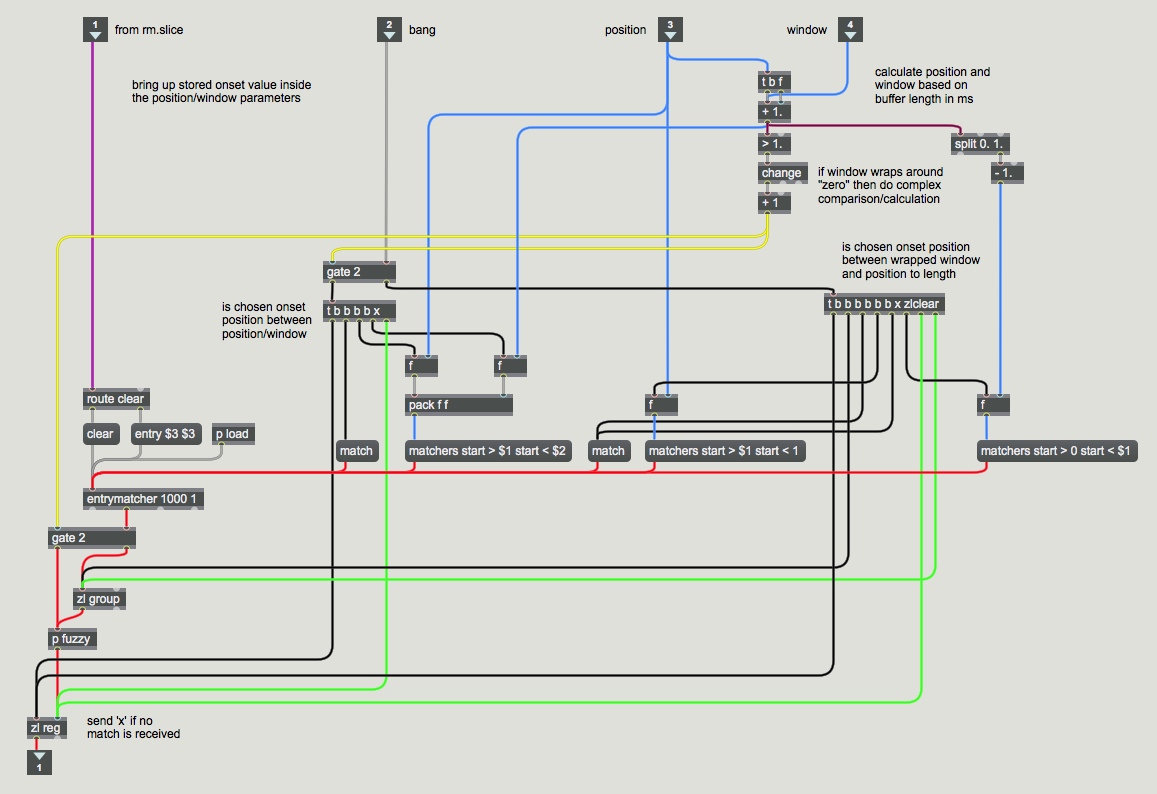

My initial sketch for what I wanted to control focused on having dynamic control over which segment of a buffer to play back, along with control over its pitch and volume. I then wanted four effects (of the eight in The Party Van), along with gesture/pattern recording and the slicer/grain methods of buffer playback. That, more or less, became mapped onto this flat surface. Buffer playback position and window parameters were mapped to the left analog stick, and pitch and volume were mapped to the right analog stick. This gave me high-resolution gestural control over the temporal buffer space: what is played, and how fast and loud it is. The effects, gesture/pattern recording, and slicer/grain controls were mapped onto the rest of the front-facing buttons.

initial sketch of desired feature set

I showed some of my early mapping experiments to Sam Andreae while we were taking a break from rehearsing. Sam and I often play video games together, so he knows many of the games I was basing the mapping ideas on. In addition, he is a brilliant performer who uses electronics in his setup, so he is in a unique position to understand the ‘whats’ and ‘whys’ of what I was doing. Sam gave me a lot of great feedback, much of which ended up in Cut Glove. One particularly important point he raised in these early sessions was that, for the mapping to really feel like an instrument, the patch shouldn’t do anything if you’re not touching the controls. My initial mapping had more controls that stayed on after a button was pressed (latching), and a less neutral start state. Together these allowed functions to remain engaged while not in use, and left those controls at impractical parameter settings.

[su_pullquote align=”right” class=“”]The fundamental idea of treating the control and mapping like an instrument is something I wanted to adhere to as much as possible.[/su_pullquote]

The fundamental idea of treating the control and mapping like an instrument is something I wanted to adhere to as much as possible. This desire for an instrument-al nature is also the reason why I designed Cut Glove for use without a computer screen. This design paradigm is something that I carried over from my hardware-based sampling days, and later implemented in The Party Van. The computer was there to handle all of the heavy lifting, but it was not to be used to input, display, or edit information during a performance. When I perform, my laptop screen is black and the laptop is half closed. I have found that this small decision refocuses my attention on my ears and my instrument.

This proved to be difficult to implement with such a complex instrument/mapping. Even in the most complicated video game mappings, you have instant visual, aural, and often haptic feedback as to whether or not the move, combo, or gesture you executed was successful. Such feedback is crucial. Initially, the effect modules were set up so that one press would turn an effect on, and another press would turn it off. That meant that at any given point there was no way to tell which effects were on or off, other than by the sounds you heard. This could be especially difficult when other processes were drastically manipulating the sounds. All of which made for a complex memory game of trying to remember what was on, on top of all the other musical considerations.

The longer-term goals, outlined later in this post, are to incorporate visual and haptic feedback into the controller itself. The Xbox 360 controller has built-in LEDs and rumble motors, which video games generally take advantage of. In the interim I thought about designing a large performance-mode display to use in a live context, but decided against it at this stage so as not to develop a dependency that I would later be unable to shake. At the moment my general approach is to practice using the system without a screen, as one would with any other instrument, in order to overcome this initial lack of feedback.

[su_pullquote align=”right” class=“”]So removing that visual dependency allows for a fuller, more nuanced understanding of the underlying systems.[/su_pullquote]

In addition to the improved mental and physical navigation of the mapping one acquires through repeated use, I have found that not looking at the display can improve how you navigate the software. With non-linear processing systems, and even more so with complex audio analysis-based manipulation, any kind of interface serves as a dimensionally – and conceptually – reduced space. This multidimensional and metaphorically bound mapping cannot be accurately represented visually. So removing that visual dependency allows for a fuller, more nuanced understanding of the underlying systems, similar to how a memorized performance is generally better than one being read. This is easily demonstrated by navigating a buffer filled with known material using the position/window controls (left analog stick) with and without the user interface (UI). I have found that the abstracted mental version of the contents of the buffer is much easier to navigate than the visually driven one (which involves a visual processing lag).

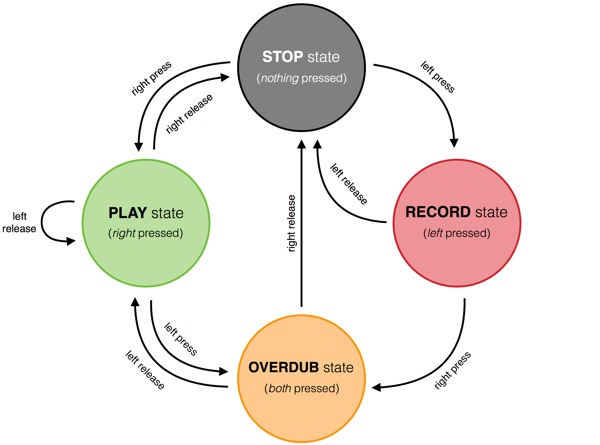

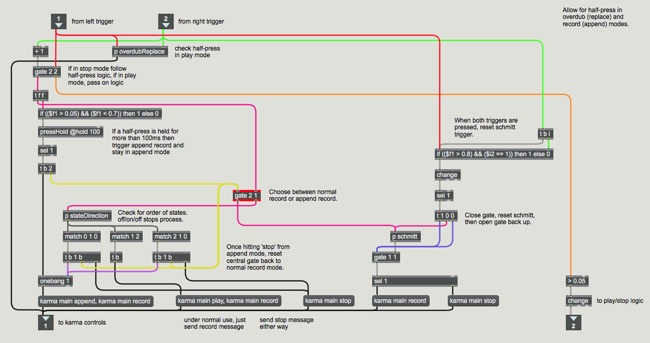

Play/Record/Overdub Mapping

I mapped the left and right triggers to record and play because of their physical placement, as well as my ingrained association with the buttons doing something in games like Halo (firing weapons). Pressing the left trigger will start recording into a buffer and pressing the right trigger will play from a buffer. I’ve implemented my adaptation of the state machine from the Line 6 DL-4 looper to handle what happens when you press both triggers at once, or when you are holding one and press the other. I had implemented a similar state machine in The Party Van, but the typical press+hold usage in Cut Glove required additional state transition considerations.
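The two-trigger logic is easier to follow as code. Below is a minimal Python sketch of a press/hold looper transport with four states. The transition rules are assumptions, loosely modeled on common looper behaviour and on the idea that nothing should sound unless a control is held. They are not a transcription of the DL-4 or of the Cut Glove patch, and the class and method names are hypothetical.

```python
from enum import Enum, auto


class State(Enum):
    STOPPED = auto()
    RECORDING = auto()
    PLAYING = auto()
    OVERDUBBING = auto()


class LooperTransport:
    """Hypothetical press/hold transport: left trigger = record, right trigger = play."""

    def __init__(self):
        self.state = State.STOPPED
        self.has_loop = False
        self.right_held = False

    def press_left(self):
        if self.state == State.PLAYING:
            self.state = State.OVERDUBBING   # holding play, then record: overdub
        else:
            self.state = State.RECORDING     # otherwise start a fresh loop
            self.has_loop = False            # (the previous loop is discarded)

    def release_left(self):
        if self.state == State.RECORDING:
            self.has_loop = True             # letting go of record closes the loop
            self.state = State.PLAYING if self.right_held else State.STOPPED
        elif self.state == State.OVERDUBBING:
            self.state = State.PLAYING       # back to plain playback

    def press_right(self):
        self.right_held = True
        if self.state == State.RECORDING:
            self.has_loop = True             # play while recording closes the loop
            self.state = State.PLAYING
        elif self.state == State.STOPPED and self.has_loop:
            self.state = State.PLAYING

    def release_right(self):
        self.right_held = False
        if self.state in (State.PLAYING, State.OVERDUBBING):
            self.state = State.STOPPED       # nothing sounds unless a trigger is held
```

In this sketch, holding the play trigger and then squeezing record moves the transport into overdub. Letting go of record drops it back to plain playback, and letting go of play silences everything.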

The basic state machine works as follows:

[su_pullquote align=”right” class=“”]These half-press functions serve to create non-linear loops in which the material is not heard back in the order it was originally recorded.[/su_pullquote]

This mapping allows a dynamic and robust set of behaviors with only two buttons. In addition to this binary mapping, I’ve implemented functionality based on trigger half-presses, drawing on the continuous nature of the triggers. To keep the normal play/record functions responsive, the trigger must stay in a half-pressed state for at least 20ms, which rules out fast press/releases. The left trigger serves two additional record functions. The first engages replace overdub mode (internally setting karma~’s overdub parameter to zero) when the right trigger is already being pressed. This allows for very fast replacement of material in the buffer with new incoming material, creating a real-time, non-linear, glitch-style looping originally inspired by some of the Echoplex Digital Pro looping work of Andre LaFosse. The second function mapped to the half-press of the left trigger appends audio to the existing loop. This is another karma~ feature, which allows you to add new material to the end of an already defined loop. Both of these half-press functions create non-linear loops in which the material is not heard back in the order it was originally recorded.
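The half-press timing can be sketched in a few lines of Python. This version assumes trigger values are normalized to 0.0 to 1.0 and timestamps are in milliseconds. The 0.05 to 0.7 band and the 20ms dwell time come from the text. The rule that chooses replace or append (whether or not the right trigger is held) is an assumption, and the class and function names are hypothetical.

```python
HALF_LOW, HALF_HIGH = 0.05, 0.7   # half-press band, from the text
HOLD_MS = 20.0                    # minimum dwell time in the band, from the text


class HalfPressDetector:
    """Reports a half-press only once the trigger has stayed in the band for HOLD_MS."""

    def __init__(self):
        self.entered_at = None   # time (ms) at which the trigger entered the band
        self.active = False

    def update(self, value, now_ms):
        in_band = HALF_LOW <= value <= HALF_HIGH
        if not in_band:
            # A full press passes through the band too quickly to register here.
            self.entered_at = None
            self.active = False
        elif self.entered_at is None:
            self.entered_at = now_ms
        elif now_ms - self.entered_at >= HOLD_MS:
            self.active = True
        return self.active


def left_half_press_action(half_pressed, right_held):
    """Assumed rule: replace-overdub while playing, otherwise append."""
    if not half_pressed:
        return None
    return "replace" if right_held else "append"
```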

[su_pullquote align=”right” class=“”]This allows a fluid navigation of this stuttery sound world.[/su_pullquote]

The right trigger uses a half-press to engage machine gun-like, rapid-fire playback. The left trigger’s half-press only uses the middle range of the trigger (between 0.05 and 0.7) to engage replace/append recording. The right trigger, by contrast, uses its full continuous range to control the speed of the rapid-fire playback. As you initially squeeze the right trigger, the audio starts repeating every 600ms, speeding up to a repeat every 30ms near the end of the trigger’s range (0.95). The code updates the repeat rate immediately rather than waiting for the current timing cycle to finish, allowing fluid navigation of this stuttery sound world. Because of how the mapping is implemented, it is possible to go from normal playback to a very fast stutter simply by releasing the right trigger slightly.
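Here is a minimal Python sketch of the rapid-fire timing. The 600ms and 30ms intervals and the 0.95 end point come from the text. The 0.05 start point and the geometric (exponential) curve between the two intervals are assumptions. The repeater keeps a phase accumulator instead of a fixed countdown, so a change in trigger pressure alters the very next increment rather than waiting for the current cycle to end.

```python
START, END = 0.05, 0.95          # assumed start of the squeeze; 0.95 from the text
SLOW_MS, FAST_MS = 600.0, 30.0   # repeat intervals, from the text


def repeat_interval_ms(trigger):
    """Map trigger pressure to a repeat interval, interpolating geometrically (assumption)."""
    t = min(max((trigger - START) / (END - START), 0.0), 1.0)
    return SLOW_MS * (FAST_MS / SLOW_MS) ** t


class RapidFire:
    """Phase-accumulator repeater: the rate can change mid-cycle."""

    def __init__(self):
        self.phase = 0.0   # fraction of the current repeat cycle elapsed

    def advance(self, trigger, dt_ms):
        """Advance by dt_ms at the current pressure; return how many retriggers occurred."""
        self.phase += dt_ms / repeat_interval_ms(trigger)
        fired = int(self.phase)
        self.phase -= fired
        return fired
```

A geometric curve spends more of the trigger’s travel on the slower, more rhythmic rates. A linear curve would be an equally valid starting point for tuning by ear.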

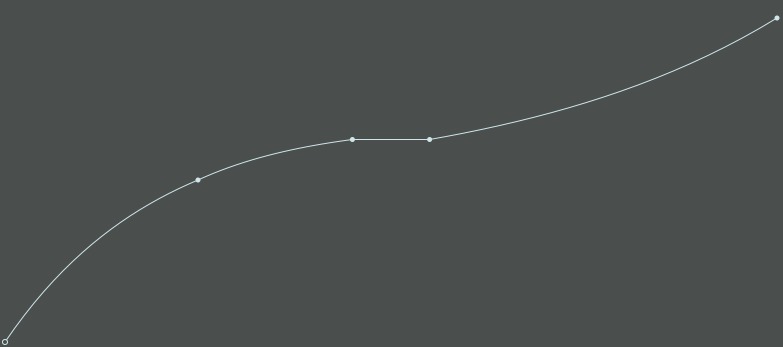

Analog Stick Mapping

After the left and right triggers, the most important mappings are those of the dual analog sticks. The left analog stick is mapped to buffer position and window, and the right analog stick controls the pitch and volume of playback. The right analog stick has some of the most fine-tuned mapping in all of Cut Glove, and it went through various revisions during the development process. I initially planned for the pitch (playback speed) mapping to go from -4.0 at the bottom of the y axis up to 4.0 at the top, with 0.0 playback speed in the middle of the analog stick’s range. This was abandoned after my initial meeting with Sam, when he suggested that the center of the analog stick’s range should be regular playback speed (1.0). After some discussion we settled on breaking the range into three sections. From the center to the top, playback speed would go from 1.0 to 4.0, giving regular playback speed in the neutral state. I divided the bottom half of the range into two sections: from the center to a quarter of the way down, playback speed goes from 1.0 to 0.0, and across the bottom quarter it goes from 0.0 to -4.0. This allowed for fine control at near-zero playback speeds as well as control of extreme negative playback speeds. These segments were then given non-linear curves, to allow for smoother movement through that parameter range.

The flat area in the middle of the graph above represents a dead zone where playback remains at 1.0 even if the stick is not exactly in the middle of its range. This creates built-in slop, allowing rapid gestural movement with the right analog stick with the confidence that, when you release the stick, it will return to 1.0 playback speed.
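The three-section mapping plus dead zone can be sketched in Python. The stick’s y axis is assumed to be normalized to -1.0 (bottom) through 1.0 (top). The section boundaries and end points follow the description above, and the bottom end point is a parameter: -4.0 in the original plan, -1.0 after the later revision. The dead-zone width and the squared curves are assumptions, chosen so that each segment flattens out near 1.0 or near zero.

```python
DEAD_ZONE = 0.05   # assumed half-width of the flat region around centre
CURVE = 2.0        # assumed exponent for the non-linear segments


def stick_to_speed(y, bottom=-4.0):
    """Map right-stick y in [-1, 1] to playback speed (piecewise, with a dead zone)."""
    y = min(max(y, -1.0), 1.0)
    if abs(y) <= DEAD_ZONE:
        return 1.0                                  # built-in slop: rest at normal speed
    if y > 0:
        u = (y - DEAD_ZONE) / (1.0 - DEAD_ZONE)     # 0..1 across the upper half
        return 1.0 + 3.0 * u ** CURVE               # 1.0 -> 4.0
    if y >= -0.5:
        u = (-y - DEAD_ZONE) / (0.5 - DEAD_ZONE)    # 0..1 from centre to the quarter mark
        return (1.0 - u) ** CURVE                   # 1.0 -> 0.0, flattening near zero
    u = (-y - 0.5) / 0.5                            # 0..1 across the bottom quarter
    return bottom * u ** CURVE                      # 0.0 -> bottom, flattening near zero
```

Both lower segments meet at 0.0 with zero slope, which is one way to get the extra resolution around near-zero speeds that the mapping aims for.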

[su_pullquote align=”right” class=“”]This limits my reverse playback speed, but allows for a more perceptually broad gesture space with the right analog.[/su_pullquote]

The pitch/speed mapping of the right analog stick stayed this way long into the development process. I revised it before finalizing Cut Glove, when I realized that, in context, -4.0 playback speed sounds very similar to +4.0 playback speed. This meant that when making extreme gestures with the right analog stick, only half of its range was perceptually useful, turning a signed parameter range into an effectively unsigned one. Other than small reverse-sounding artifacts, both halves of the right analog stick’s y axis sounded the same. I subsequently shrank the range at the bottom of the mapping so that it only goes down to -1.0 playback speed. This limits the maximum reverse playback speed, but allows for more perceptually broad gestural control.

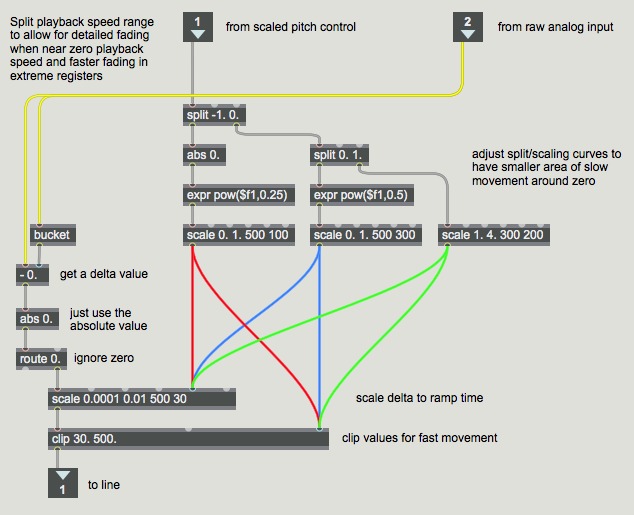

In addition to fine-tuning the range and curves for the pitch control, I spent a long time fine-tuning the adaptive smoothing that is applied to the pitch parameter of karma~’s playback. This proved to be critical in creating reactive and buttery-smooth control over playback speed. My initial approach to adaptive smoothing was based solely on the -1.0 to 4.0 playback speed parameter. This proved to be inadequate for two reasons. Firstly, the analog stick’s range was artificially divided into a large parameter range (from -1.0 to 4.0) and secondly it was non-linear by design. The smoothing did not work well for the same reasons why the pitch mapping range worked well. After testing some alternatives I found the best results by smoothing the pitch parameter based on the raw input from the right analog stick scaled relative to where in the pitch parameter range I was. I wanted more smoothing applied when I was at near zero playback speeds and less smoothing when I was at higher playback speeds, so I built in adaptive smoothing to account for this. I accomplished this by taking the delta value from the raw analog stick input and using that to control the ramp time on the line~ object, which is the core of the audio rate smoothing.
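Here is a minimal Python sketch of delta-driven adaptive smoothing. It computes a ramp time of the kind that would be sent to line~ along with each new target value. The ramp grows with the size of the raw stick jump and shrinks as the current playback speed moves away from zero. The floor, the ceiling, and the 1 / (1 + |speed|) weighting are all assumptions, not the tuned values in Cut Glove.

```python
MIN_RAMP_MS = 5.0     # assumed floor so small moves stay responsive
MAX_RAMP_MS = 120.0   # assumed ceiling for large, fast jumps


def ramp_time_ms(prev_raw, raw, speed):
    """Ramp time for a line~-style smoother, from the raw stick delta and the current speed."""
    delta = abs(raw - prev_raw)              # raw stick movement, not the mapped speed
    # Assumed weighting: full smoothing at speed 0, fading out as |speed| grows.
    near_zero = 1.0 / (1.0 + abs(speed))
    return MIN_RAMP_MS + (MAX_RAMP_MS - MIN_RAMP_MS) * min(delta, 1.0) * near_zero
```

Working from the raw stick delta sidesteps the problem described above. The mapped speed range is both stretched and curved, whereas the raw input moves uniformly under the thumb.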

The left analog stick controls the buffer position with its x axis and window parameters with its y axis. This left-to-right mapping feels natural/organic as it matches what one would expect in terms of reading an on-screen buffer from left to right. Although this works well, it has an odd resting, or neutral, state. If the analog stick is untouched, both position and window are set to 0.5, which only plays from the middle to the end of the buffer. I’ve experimented with different mapping ideas here, but have not yet found a solution that retains left-to-right controller mapping while allowing the resting state to play the entire buffer.
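The resting-state problem is easy to see in a short Python sketch. It assumes the stick axes are normalized to -1.0 through 1.0, that the window is a length measured forward from the position, and that the window is clipped at the buffer’s end. That is one layout consistent with the behaviour described above. The function name is hypothetical.

```python
def stick_to_region(x, y, buffer_len):
    """Map left-stick x/y in [-1, 1] to a (start, end) sample region (assumed layout)."""
    position = (x + 1.0) / 2.0          # left edge of the stick = start of the buffer
    window = (y + 1.0) / 2.0            # assumed: stick up = larger window
    end = min(position + window, 1.0)   # window is clipped at the buffer end
    return int(position * buffer_len), int(end * buffer_len)
```

With the stick centered, `stick_to_region(0.0, 0.0, 1000)` gives `(500, 1000)`: only the second half of the buffer. Playing the whole buffer needs the stick pushed to the upper left.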

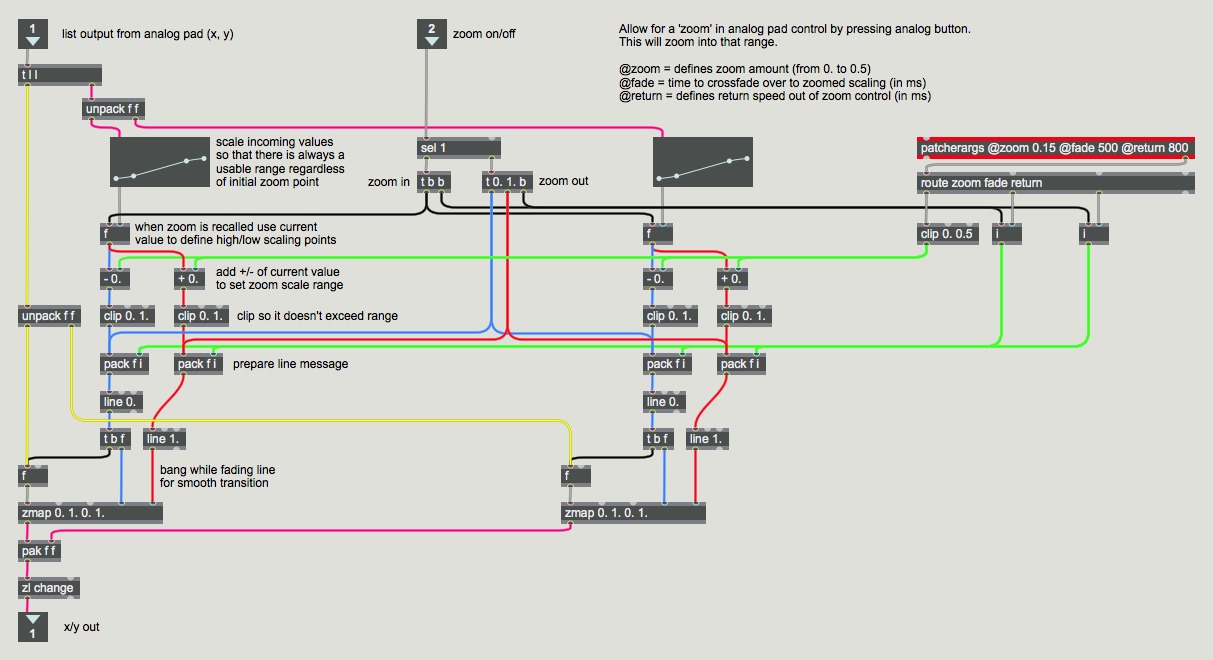

Both analog sticks use the press-to-click buttons underneath the analog sticks to zoom into their respective parameter spaces. This was another one of Sam’s suggestions, which came after a particularly long Halo 4 session. In Halo, when you have a sniper rifle you can press the right analog stick to zoom in to the target, allowing for more precise aim. The way I have implemented this mapping metaphor is by zooming into the parameter range based on where the analog stick is when you initially press it. If the analog stick is in the top right of its range, pressing in to zoom will zoom to that specific parameter range.

[su_pullquote align=”right” class=“”]These morph speeds felt natural and transparent, fitting in with my expected behavior coming over from a video game paradigm, while at the same time sounding good.[/su_pullquote]

In Cut Glove the range being zoomed into is finite (0.0 to 1.0), unlike the infinite range in Halo (you can spin around forever), so zooming needed to re-center itself in order to work as a mapping metaphor. The way I handle this in Cut Glove is that when you zoom in, the x and y positions at the moment of pressing become the new center of the range, morphing there over 500ms. Releasing the stick’s button to zoom back out morphs back to the current live position over 800ms. In my initial mapping you had to return the stick to the middle before engaging the zoom, which avoided any audible hand-over, but this ended up feeling awkward and confusing in context. In practice I have found that the current implementation and morph speeds feel and sound natural and transparent, and fit my behavior expectations coming over from a video game paradigm.

To allow for maximum flexibility in context, I capped the extreme ends of the range while zooming so that if you zoom in while in a corner (x=1.0, y=1.0), you still end up with a usable range while zoomed in. This means that no matter where you are in the parameter space when you zoom in, you will still have full control over the 15% range you have zoomed in to.
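A minimal Python sketch of the zoom arithmetic for one axis follows. The 15% range and the 500ms/800ms morph times come from the text. Mapping the live stick across the zoomed range around the captured center, and using a linear crossfade for the morph, are assumptions about one way to realize the behaviour described. The patch may differ.

```python
ZOOM_WIDTH = 0.15                       # zoomed range, from the text
ZOOM_IN_MS, ZOOM_OUT_MS = 500.0, 800.0  # morph times, from the text


def zoom_center(press_value):
    """Centre of the zoomed range, capped so the full 15% range stays inside 0..1."""
    half = ZOOM_WIDTH / 2.0
    return min(max(press_value, half), 1.0 - half)


def zoomed_value(center, stick):
    """Map a live stick value in [-1, 1] across the zoomed range around `center`."""
    return center + stick * ZOOM_WIDTH / 2.0


def morph(a, b, elapsed_ms, duration_ms):
    """Linear crossfade from a to b (assumed shape) over duration_ms."""
    t = min(max(elapsed_ms / duration_ms, 0.0), 1.0)
    return a + (b - a) * t
```

Zooming in from the top-right corner (1.0) caps the center at 0.925, so the zoomed stick still sweeps the full 0.85 to 1.0 range instead of being cut in half at the edge.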

Analog Latch Mode

Given how fluid and gestural the analog control mapping is, it can be difficult to keep a single parameter perfectly still. If you wanted to play a tiny part of the buffer over and over at a fixed playback speed, it would be nearly impossible to do so with the analog sticks mapped as they are. This, coupled with the fact that I wanted to be able to focus on other aspects of control in the patch, is why I came up with latch mode. The left bumper is a global latch button, allowing you to latch playback, turn effects on/off, and freeze the analog sticks in a certain position. To latch one of the analog sticks, you press the left bumper at the same time as that stick’s press-to-click button. This avoids mapping confusion with zooming in, or latching an effect while controlling position/window/speed/volume.

While in analog latch mode, you surrender control over one of the two parameters controlled by each analog stick, leaving you only window control on the left analog stick and pitch control on the right. You can alter the parameter by making circles around the perimeter of the analog stick’s range. This allows you to very slowly increase or decrease the assigned parameter as well as leave it in a fixed position.
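Circling the stick to nudge a value works like a rotary encoder. Here is a minimal Python sketch. It tracks the stick’s angle with `atan2` and unwraps it so that crossing the -π/π seam counts as a small step, then adds the change to the latched parameter. The rim threshold, the amount of change per revolution, and counter-clockwise meaning “increase” are all assumptions.

```python
import math

RADIUS_MIN = 0.9   # assumed: only motion near the rim counts
PER_TURN = 0.1     # assumed parameter change per full revolution


class CircleLatch:
    """Nudge a latched 0..1 parameter by circling the stick around its perimeter."""

    def __init__(self, value):
        self.value = value
        self.last_angle = None

    def update(self, x, y):
        if math.hypot(x, y) < RADIUS_MIN:
            self.last_angle = None        # away from the rim the value stays fixed
            return self.value
        angle = math.atan2(y, x)
        if self.last_angle is not None:
            d = angle - self.last_angle
            # Unwrap so crossing the -pi/pi seam is a small step, not a full turn.
            if d > math.pi:
                d -= 2 * math.pi
            elif d < -math.pi:
                d += 2 * math.pi
            self.value = min(max(self.value + d / (2 * math.pi) * PER_TURN, 0.0), 1.0)
        self.last_angle = angle
        return self.value
```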

[su_pullquote align=”right” class=“”]The practical reason for this is to minimize the need to memorize effect state with latched on/off, and the musical reason is to allow for a dynamically playable processing.[/su_pullquote]

The four effect module slots are mapped to the x/y/a/b buttons in a momentary manner, meaning that an effect is only engaged while its button is pressed. A practical reason for this is to minimize the need to memorize the current state of the effect modules, which can be difficult when turning effects on and off rapidly. A more musical reason is that it encourages more dynamically playable processing in general, since each effect can be quickly turned on and off. Being able to dynamically alter the processing in this way is one of the central principles of Cut Glove.

The two bumper buttons act as additional modifiers for the x/y/a/b buttons. If you have an effect engaged and you press the left bumper, you latch the effect on. This latches as many effects as happen to be held down when the left bumper is pressed. The right bumper engages analog latch mode, which allows the left analog stick to control the parameters of whichever effect is currently on, or has its corresponding button pressed. Because the mapping is set up in a manner where an effect is only engaged when its button is released, you can control an effect module’s parameters without turning the effect on. To do so, press and hold the corresponding button, then use the right bumper and left analog stick before releasing the button. So, for example, if I wanted to control the parameters of the lofi module without turning it on, I could press+hold the corresponding button on the controller (‘a’ by default), then press the right bumper. This would put me into analog latch mode without turning on the effect, allowing me to set up specific parameter settings ahead of time.

Effect Module Mapping

[su_pullquote align=”right” class=“”]In general, each effect module has primary mappings and secondary mappings.[/su_pullquote]

To control multiple effect parameters with a single analog stick, I came up with a variety of mapping approaches, depending on the number and type of parameters each effect had. In general, each effect module has primary mappings and secondary mappings. The primary mappings are based on the x/y axes of the analog stick, mapping one axis to one parameter (blend, in the pitch effect) and the other axis to another parameter (shift). The secondary mappings are generally drawn from characteristics like the movement speed of the analog stick, the distance of the stick from the center of its range, or which positional quadrant the stick is in. These secondary mapping parameters were then scaled, smoothed, and mapped onto musically tuned effect parameters.
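The three secondary features named above can all be derived from two successive stick samples. Here is a minimal Python sketch, assuming axes normalized to -1.0 through 1.0 and a known time between samples. The quadrant numbering and the one-pole smoother coefficient are assumptions, and the function names are hypothetical.

```python
import math


def stick_features(prev_xy, xy, dt_ms):
    """Secondary mapping features from two successive stick samples (x, y in [-1, 1])."""
    (px, py), (x, y) = prev_xy, xy
    speed = math.hypot(x - px, y - py) / (dt_ms / 1000.0)    # stick units per second
    distance = min(math.hypot(x, y), 1.0)                    # 0 at rest, 1 at the rim
    quadrant = (0 if x >= 0 else 1) + (0 if y >= 0 else 2)   # 0=UR, 1=UL, 2=LR, 3=LL
    return speed, distance, quadrant


def one_pole(prev, target, coeff=0.9):
    """Simple smoothing step for a feature such as speed (coefficient is an assumption)."""
    return coeff * prev + (1.0 - coeff) * target
```

Speed in particular is jittery from sample to sample, which is why some smoothing stage like `one_pole` usually sits between the raw feature and the effect parameter.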

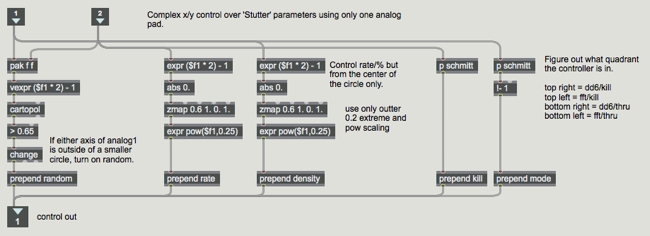

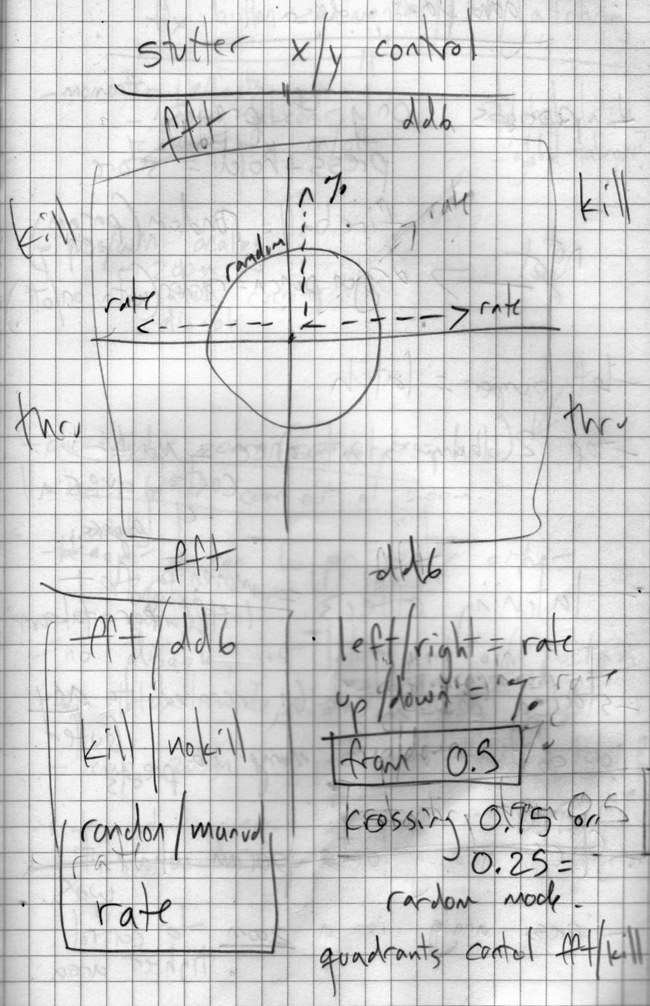

The stutter module has three on/off controls (mode, kill, random), and two 0.0 to 1.0 parameters (rate, density). In order to control these in both a natural and musical way, I came up with the idea of breaking the analog stick’s movement space into quadrants. This allowed me to control the mode (dd-6/fft) and kill parameters by assigning their combination to each of the four quadrants. For the random mode, I used a threshold based on the distance the analog stick was from the center of its range. This meant that using the x/y axes of the analog stick to control rate/density would not be very effective, as the desired mode/kill/random quadrant positioning would constrain their usable range. The solution I came up with was to map the x/y axes starting from the center of the range, so that moving the analog stick left and right would yield the same results. I then applied some scaling to make the control feel and sound natural.
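The quadrant-plus-mirroring idea is compact in code. Here is a minimal Python sketch assuming axes normalized to -1.0 through 1.0. Which (mode, kill) combination lives in which quadrant, and the distance threshold for random mode, are assumptions. The extra scaling applied in the real mapping is left out, so rate and density here are plain absolute values.

```python
import math

RANDOM_THRESHOLD = 0.9   # assumed distance from centre that switches random on

# Assumed assignment of (mode, kill) combinations to quadrants, keyed by (x >= 0, y >= 0).
QUADRANT_MAP = {
    (True, True): ("dd-6", False),    # upper right
    (False, True): ("dd-6", True),    # upper left
    (True, False): ("fft", False),    # lower right
    (False, False): ("fft", True),    # lower left
}


def stutter_params(x, y):
    mode, kill = QUADRANT_MAP[(x >= 0, y >= 0)]
    return {
        "mode": mode,
        "kill": kill,
        "random": math.hypot(x, y) > RANDOM_THRESHOLD,
        "rate": min(abs(x), 1.0),      # mirrored: left and right give the same rate
        "density": min(abs(y), 1.0),   # mirrored: up and down give the same density
    }
```

Because rate and density are measured outward from the center, choosing a quadrant never costs you any of their usable range.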

Here is my initial sketch of the mapping:

And here is the final mapping, after some fine-tuning, as implemented in Max:

code for stutter mapping

[su_pullquote align=”right” class=“”]This coupled mapping gives organic control over the perceptual timbre of the sound instead of allowing for discrete control over each individual parameter.[/su_pullquote]

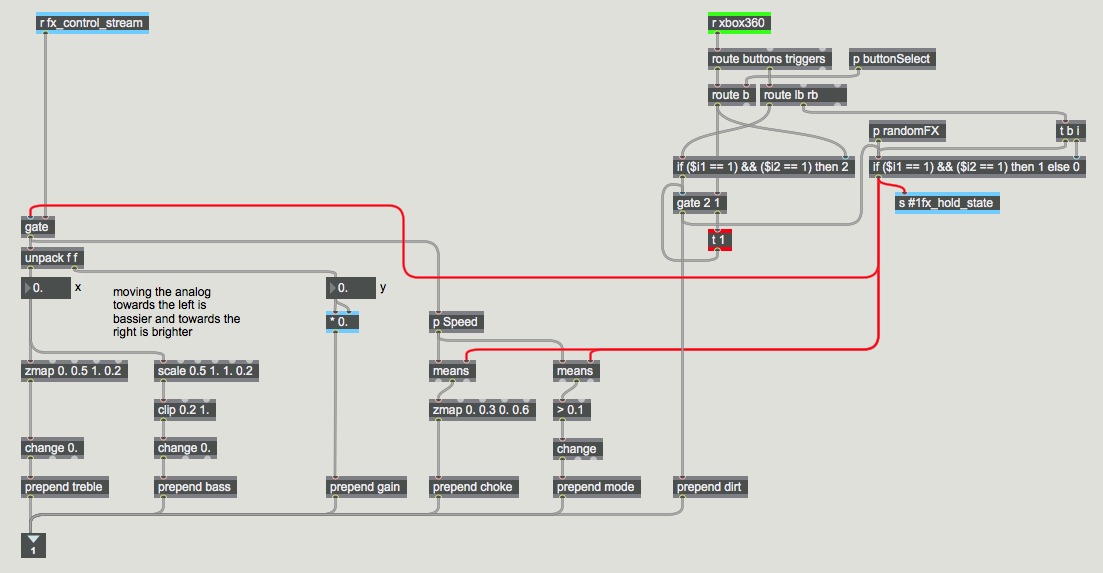

The dirt module takes a different approach, since it has four 0.0 to 1.0 parameters and only one on/off parameter. The y axis of the analog stick is mapped independently to the gain parameter. The x axis is mapped to both the bass and treble parameters, with both set to their maximum (1.0) at the center of the stick’s range. Moving the analog stick towards the right side of its range makes the sound brighter by reducing the bass, and moving it towards the left side makes the sound darker by reducing the highs. This coupled mapping gives organic control over the perceptual timbre of the sound instead of allowing for discrete control over each individual parameter. The movement speed of the analog stick is mapped, as a secondary mapping, to both the choke parameter and the mode (hard/soft). The faster the analog stick is moved, the higher the choke parameter goes, and if the speed exceeds an assigned threshold, the mode switches from soft to hard.
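The dirt mapping translates almost line for line into Python. This sketch assumes axes normalized to -1.0 through 1.0 and a stick speed in stick units per second, computed elsewhere. The coupled bass/treble behaviour follows the description above. The linear shapes, the speed that drives choke to 1.0, and the hard-mode threshold are assumptions.

```python
SPEED_FULL = 20.0       # assumed stick speed (units/s) that drives choke to 1.0
HARD_THRESHOLD = 12.0   # assumed speed above which mode flips from soft to hard


def dirt_params(x, y, speed):
    return {
        "gain": (y + 1.0) / 2.0,            # y axis alone drives gain
        "bass": 1.0 - max(x, 0.0),          # moving right cuts bass -> brighter
        "treble": 1.0 + min(x, 0.0),        # moving left cuts treble -> darker
        "choke": min(speed / SPEED_FULL, 1.0),
        "mode": "hard" if speed > HARD_THRESHOLD else "soft",
    }
```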

Preset/D-pad Mapping

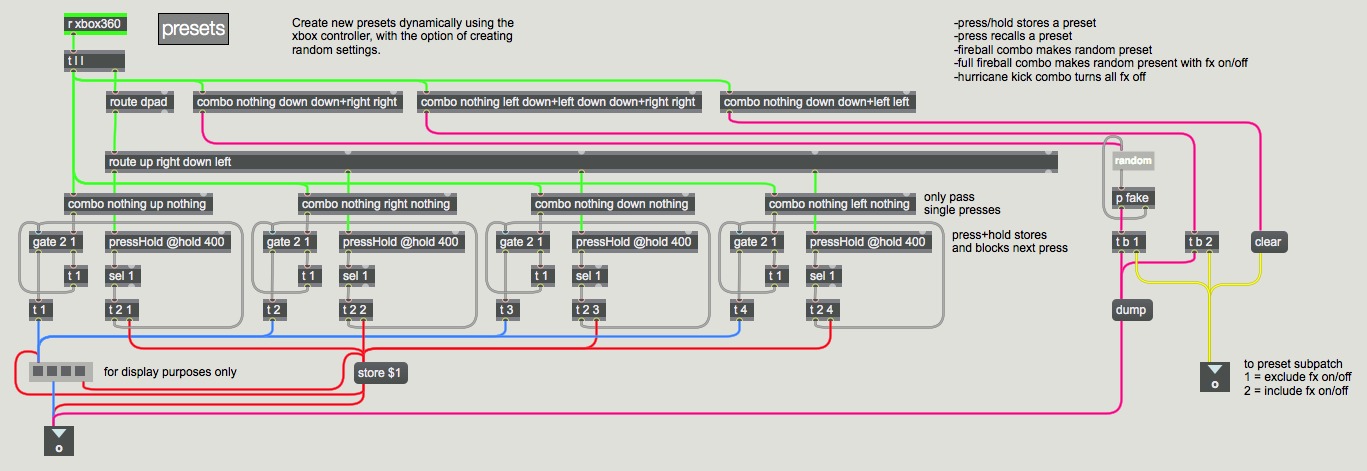

[su_pullquote align=”right” class=“”]In order to be able to control presets with the four buttons of the D-pad, I implemented video game special attacks from Street Fighter.[/su_pullquote]

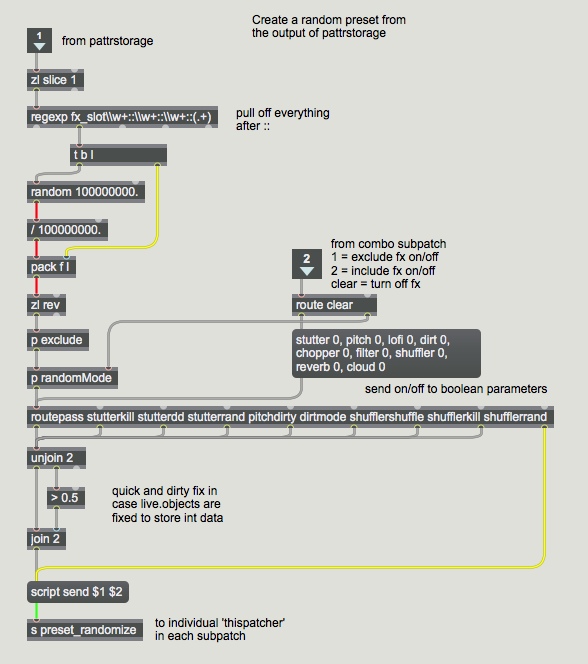

It is possible to dynamically store and recall presets of the four effect modules with the D-pad. Each direction on the pad (up/down/left/right) represents one of the four available preset slots, which can be written over endlessly. To store a preset you press and hold any direction (up/down/left/right) for 400ms; and to recall the preset you simply press that direction again. I also wanted to be able to randomize parameters and turn on/off effects with the four buttons of the D-pad, so I implemented this functionality by using Street Fighter special attacks.
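The hold-to-store, tap-to-recall behaviour can be sketched in Python as follows. The 400ms threshold comes from the text. Deciding between store and recall at release time, rather than firing the store the moment 400ms elapses, is a simplifying assumption. The class name and snapshot format are hypothetical.

```python
HOLD_TO_STORE_MS = 400.0   # from the text


class PresetPad:
    """Hypothetical four-slot preset store/recall on the D-pad directions."""

    def __init__(self):
        self.slots = {}        # direction -> stored parameter snapshot
        self.pressed_at = {}   # direction -> press time in ms

    def press(self, direction, now_ms):
        self.pressed_at[direction] = now_ms

    def release(self, direction, now_ms, current_params):
        held = now_ms - self.pressed_at.pop(direction)
        if held >= HOLD_TO_STORE_MS:
            self.slots[direction] = dict(current_params)   # long hold: overwrite slot
            return "stored", None
        return "recalled", self.slots.get(direction)        # short press: recall (None if empty)
```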

Hadouken (Fireball) special attack. Image from Street Fighter II.

Street Fighter’s Hadouken (or Fireball) special attack forms the basis of the secondary mappings on the D-pad. Executing a fireball combo (down, down+right, right) generates a random preset without turning any effects on or off. Executing a wider fireball combo (left, left+down, down, down+right, right) generates a random preset that can also turn effects on or off. Finally, a reverse fireball combo (down, down+left, left) turns all effects off. This final all-off combo was needed because, without looking, it was impossible to tell which effects were on at any given time while generating randomized presets.

I wanted to be able to create a randomized preset from an unknown number of effect parameters without having to add each one to a list by hand. So I used pattrstorage’s ‘dump’ message coupled with regexp to find out which parameters were available for randomization. (This section of the patch was more elegant before I switched to using live.objects, since pattrstorage reports live.objects as floats regardless of their actual (float or int) type.)

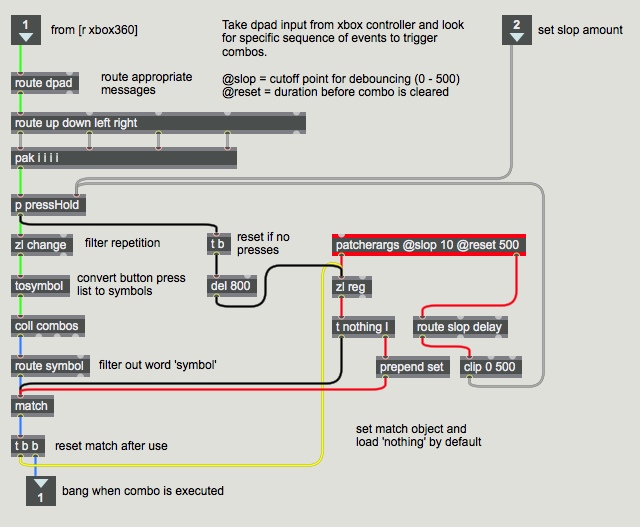

In order to handle the creation of special attacks, or combos, in Max, I came up with an abstraction that allows me to type out the combo I want to check for in the D-pad’s control stream. It includes a slop parameter that eliminates (accidental) presses shorter than 10ms. The combo matching also times out at 800ms, so if a combo is abandoned halfway through its execution, it is not triggered by mistake when making unrelated moves later on.

This abstraction also came in handy for differentiating individual preset store/recall functions from attempted combos by checking for a nothing press before and after any given preset command. This nothing press also serves to differentiate the two types of random fireball combos, as both combos end with the same exact sequence of events.
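Here is a minimal Python sketch of a combo matcher in the spirit of that abstraction. The 10ms slop and 800ms timeout come from the text. Each D-pad state is represented as a set of held directions, so a diagonal is `{"down", "right"}`. A combo only counts if it starts from a neutral (nothing) press, which is what keeps the narrow fireball from firing inside the wider one. Applying the timeout between successive steps, and the text notation for combos, are assumptions. All names are hypothetical.

```python
SLOP_MS = 10.0          # from the text: states shorter than this are ignored
TIMEOUT_MS = 800.0      # from the text; assumed here to apply between successive steps
NEUTRAL = frozenset()   # no D-pad direction held


def parse_combo(text):
    """'down, down+right, right' -> list of D-pad states (hypothetical notation)."""
    return [frozenset(step.strip().split("+")) for step in text.split(",")]


def debounce(events):
    """Drop states held for less than SLOP_MS. events: [(time_ms, frozenset), ...]."""
    kept = []
    for i, (t, state) in enumerate(events):
        if i + 1 < len(events) and events[i + 1][0] - t < SLOP_MS:
            continue                  # too short: treat as an accidental press
        if kept and kept[-1][1] == state:
            continue                  # merge repeats left behind by dropped blips
        kept.append((t, state))
    return kept


def combo_fired(events, combo):
    """True if a neutral state is followed directly by every step of `combo`,
    with no more than TIMEOUT_MS between successive steps."""
    states = debounce(events)
    n = len(combo)
    for i in range(len(states) - n):
        if states[i][1] != NEUTRAL:
            continue                  # a combo must start from the neutral position
        window = states[i + 1:i + 1 + n]
        if [s for _, s in window] != combo:
            continue
        times = [t for t, _ in window]
        if all(b - a <= TIMEOUT_MS for a, b in zip(times, times[1:])):
            return True
    return False
```

The wide fireball ends with exactly the same three states as the narrow one. Requiring a neutral state immediately before the first step is what tells them apart.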

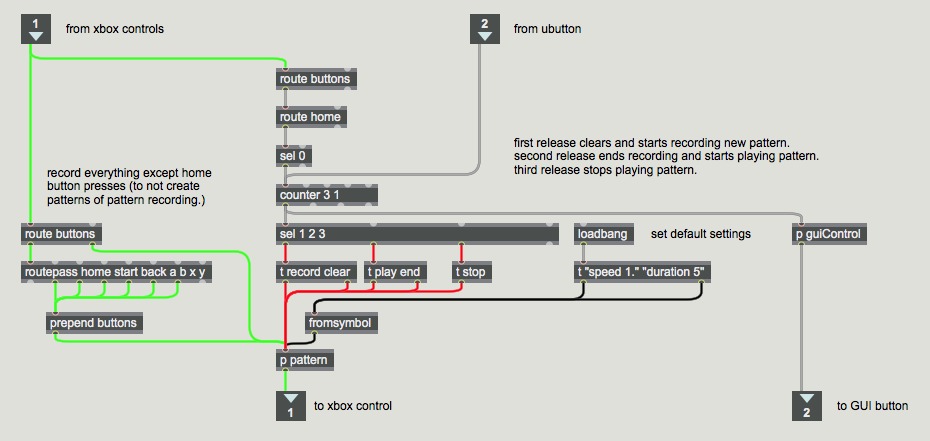

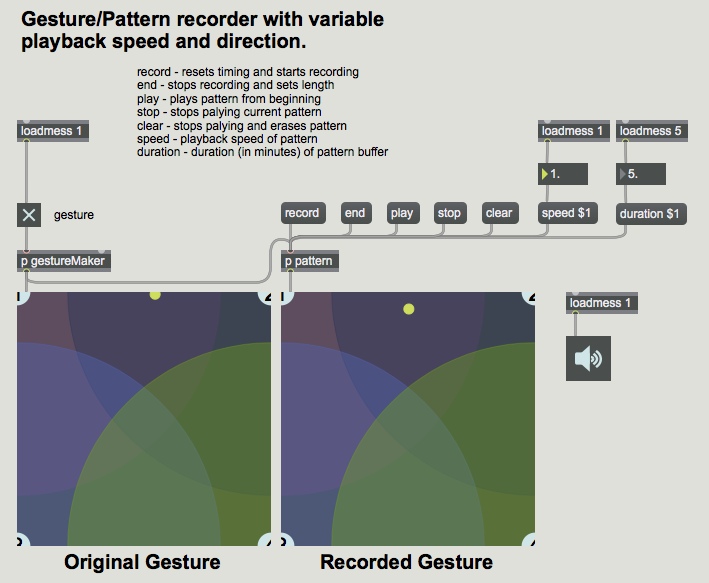

Gesture/Pattern Recorder Mapping

Finally, Cut Glove includes a gesture/pattern recorder that can record any input from the Xbox controller. The gesture/pattern recorder is mapped to the home button in the center of the controller. To control its various functions (record/play/stop) with only one button, I created a mapping where the first press of the home button starts recording a pattern. A second press immediately begins playback of that pattern, and a third press stops it. This simple mapping fits in with the DL-4-style transport control paradigm, where starting to record a new gesture/loop erases the previous one.
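The one-button cycle can be sketched as a tiny Python state machine. Record, play, and stop on successive presses come from the text. That a press after stopping starts a fresh recording and erases the old gesture follows the DL-4-style paradigm mentioned above, but the exact behaviour is an assumption. The class and method names are hypothetical, and the sketch stores discrete events rather than the audio-rate data the real recorder uses.

```python
class GestureTransport:
    """Hypothetical one-button record -> play -> stop cycle for the home button."""

    def __init__(self):
        self.state = "stopped"
        self.events = []   # recorded (time_ms, control, value) tuples

    def home_pressed(self):
        if self.state == "stopped":
            self.events = []           # a new recording erases the previous gesture
            self.state = "recording"
        elif self.state == "recording":
            self.state = "playing"     # second press: play back immediately
        else:
            self.state = "stopped"     # third press: stop
        return self.state

    def capture(self, t_ms, control, value):
        """Store a controller event, but only while recording."""
        if self.state == "recording":
            self.events.append((t_ms, control, value))
```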

[su_pullquote align=”right” class=“”]This recontextualization of gestural input is one of the most exciting aspects of the gesture/pattern recorder.[/su_pullquote]

The gesture/pattern recorder only records the actual input from the Xbox controller. This means that playing back the same series of events (physical button presses and analog stick wiggles) will not produce the same sonic outcome, as only the control events are replayed, not the audio itself. Take, for example, a pattern in which an audio loop is created, then played back, and finally overdubbed onto. When that pattern is played back, the first recorded event, which originally started recording a new loop, will now instead take the looper out of overdub mode, because of how the looper’s state machine is set up. Even in a simpler example where the recorded pattern consists only of creating a loop and then playing it back, a completely different sonic outcome is created each time the pattern is replayed, as new audio is recorded each time. Additionally, any manipulation of the analog sticks, bumper modifiers, or effect buttons is recorded and played back with the gesture. This recontextualization of gestural input is one of the most exciting and promising aspects of the gesture/pattern recorder.

///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

DSP (the guts)

karma~ Cores

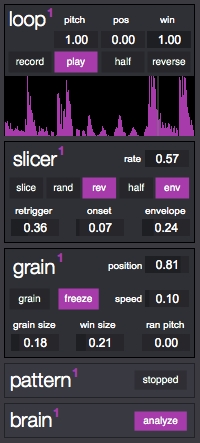

The core of Cut Glove is made up of four identical, parallel karma cores built around the karma~ external, which was written specifically for this project. Each karma core is made up of a looper, a buffer slicer, a granular synth, a gesture/pattern recorder, and an audio-analysis section.

a single ‘karma core’

[su_pullquote align=”right” class=“”]I wanted all of these modules to feel like one single unit, even though they are completely different under the hood.[/su_pullquote]

The loop, slicer, and grain modules are transparently intertwined so that the controls in the loop module (pitch, pos/win, half, reverse) affect all of the other modules, and all of the modules can be used at the same time. This desire for a deep integration came from enjoying how the pitch (master clock) in my 8-bit hardware sampler, WTPA, would affect all other sampler functions. I implemented this idea in a manner whereby adjusting the pitch parameter on the loop module will affect the rate of the slicer module as well as the pitch of the grain module. Adjusting the position/window parameters will, similarly, limit the slicer/grain modules to that buffer region for jumping around and/or granulation. I wanted all of these modules to feel like one single unit, even though they are completely different under the hood.

At the center of each karma core is the custom external, karma~. karma~ is a dynamic length, varispeed looper with some complex and quirky features. Central to Cut Glove is the ability to dynamically control position/window and record/playback speed. In karma~ the play/record head automatically jumps whenever it touches the position/window boundaries, allowing for dynamic and playable control using the left analog stick. Additionally, karma~ allows for varispeed recording and playback, using interpolation to eliminate any missing or overwritten samples. This gives you similarly expressive control with the right analog stick. Cut Glove makes extensive use of these features to allow fluid and gestural control of these parameters. You can click here to read more about karma~ and what it can do.
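The two playback behaviours described above, fractional-speed reading and a head that jumps when it hits the window edges, can be sketched in Python. karma~’s actual interpolation scheme isn’t described here, so this version assumes plain linear interpolation and covers playback only, not varispeed recording. The names are hypothetical.

```python
def read_interp(buf, pos):
    """Linearly interpolated read at a non-negative fractional index (wraps at buffer end)."""
    i = int(pos)
    frac = pos - i
    a = buf[i % len(buf)]
    b = buf[(i + 1) % len(buf)]
    return a + (b - a) * frac


class VarispeedHead:
    """Read head confined to a [start, end) window; speed may be fractional or negative."""

    def __init__(self, start, end):
        self.start, self.end = start, end   # window in samples, start < end
        self.pos = float(start)

    def tick(self, buf, speed):
        out = read_interp(buf, self.pos)
        self.pos += speed
        span = self.end - self.start
        # Jump back inside the window whenever the head crosses either boundary.
        self.pos = self.start + (self.pos - self.start) % span
        return out
```

Python’s `%` always returns a result with the sign of the divisor, so reverse playback wraps from the window start to its end without a special case.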

[su_pullquote align=”right” class=“”]The slicer module allows you to jump around the buffer at a fixed or random rate, with the option of having each slice that is played back to be randomly reversed and/or half-speed.[/su_pullquote]

The slicer module is an upgraded version of the module of the same name in The Party Van. It allows you to jump around the buffer at a fixed or random rate, with the option of having each slice that is played back randomly reversed and/or played at half speed. You can also choose to apply an envelope to each slice, sized as a percentage of that slice’s duration. The retrigger and onset parameters affect which slices are available for the random selection process. The retrigger parameter gives you control over the probability that the same slice will be triggered again. The onset parameter uses an adaptation of rm.slice to segment the contents of the buffer into individual slices based on attacks, similar to how drum replacement works in most DAWs.
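The slice-selection logic can be sketched in Python. The retrigger probability, the random reverse/half-speed choices, and the envelope sized as a fraction of the slice come from the text. Choosing uniformly among the other slices when not retriggering is an assumption. Onset-based segmentation (the rm.slice part) is not shown, and the function names are hypothetical.

```python
import random


def next_slice(current, n_slices, retrigger, rng=random):
    """Pick the next slice index; `retrigger` is the probability of repeating `current`."""
    if n_slices == 1 or rng.random() < retrigger:
        return current
    choice = rng.randrange(n_slices - 1)
    return choice if choice < current else choice + 1   # any slice except current


def slice_playback(start, end, p_reverse, p_half, env_pct, rng=random):
    """Per-slice settings: random reverse/half-speed and an envelope sized to the slice."""
    return {
        "reverse": rng.random() < p_reverse,
        "speed": 0.5 if rng.random() < p_half else 1.0,
        "fade": (end - start) * env_pct,   # envelope ramp as a fraction of slice length
    }
```

Passing a seeded `random.Random` as `rng` makes a run repeatable, which helps when tuning the probabilities by ear.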

Gesture/Pattern Recorder

The gesture/pattern recorder in Cut Glove is an audio-rate pattern recorder loosely based on the core of mesh, a monome app. It supports dynamic-length recording, overdubbing, and varispeed playback, similar to the karma modules discussed above. I wanted to be able to treat recorded gestures and patterns the way I would treat the contents of a buffer, so being able to change their playback speed (including reversed playback) was essential. Overdubbing and varispeed pattern playback are not yet exposed in Cut Glove itself, but the functionality is already built into the recorder and will be used in an upcoming version.
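Treating a gesture "like the contents of a buffer" can be illustrated with a small control-rate sketch. This is a hypothetical Python class, not the audio-rate Max implementation: it stores one controller snapshot per tick and reads it back with a fractional, wrapping head, holding values rather than interpolating because button states are discrete (an assumption). Overdubbing is not shown.

```python
class GestureRecorder:
    """Hypothetical control-rate sketch: one snapshot per tick, read like a buffer."""

    def __init__(self):
        self.frames = []  # recorded controller snapshots (dynamic length)
        self.pos = 0.0    # fractional read head

    def record(self, snapshot):
        self.frames.append(snapshot)

    def play(self, speed: float):
        """Return the current snapshot, then move the read head by `speed`.

        Negative speeds play the gesture backwards; the head wraps around the
        recorded length. Snapshots are held, not interpolated.
        Assumes at least one frame has been recorded.
        """
        n = len(self.frames)
        snapshot = self.frames[int(self.pos) % n]
        self.pos = (self.pos + speed) % n
        return snapshot


rec = GestureRecorder()
for value in (10, 20, 30, 40):
    rec.record(value)
print([rec.play(0.5) for _ in range(4)])   # [10, 10, 20, 20]  (half speed)
rec.pos = 0.0
print([rec.play(-1.0) for _ in range(4)])  # [10, 40, 30, 20]  (reversed)
```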

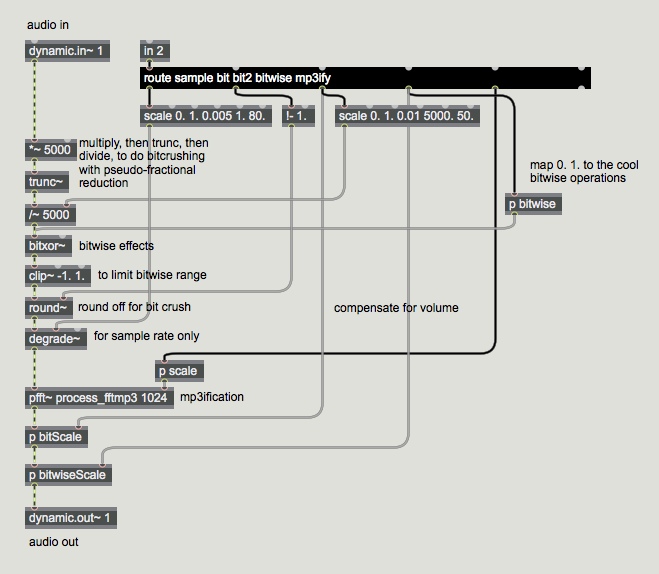

Effect Module DSP

The lofi module uses a variety of approaches to create sample-rate and bit-rate reduced sounds. In addition to these, you can apply bitwise manipulations and psychoacoustic compression to degrade the sound further in less conventional ways. There are two approaches to controlling bit depth, both of which allow continuous, as opposed to stepped, control over the bit reduction. The first technique uses multiply, truncate, and divide to discard adjustable amounts of resolution (reducing a number like 0.948384243 to 0.948). For bitwise manipulations, bitxor~ is used to create extreme, splattery bit-crush type sounds. The psychoacoustic/mp3-style compression is achieved with a custom external (framkerank~ by Alex Harker), which sorts the amplitudes of a single FFT frame in descending order and outputs each bin’s ranking: 0 for the loudest and N/2 - 1 for the quietest (where N is the FFT size). This combination of effects, and their signal processing order, creates a complex-sounding, interactive effect.
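The three lofi ingredients can be sketched in plain Python. These are illustrations, not the Max implementation: `truncate_crush` mirrors the multiply/truncate/divide step, `xor_crush` assumes an integer interpretation of the XOR (Max's bitxor~ can treat its input differently), and `frame_rank` reproduces the ranking described for the external, where a frame of N/2 magnitudes yields ranks 0 to N/2 - 1. How the ranks are then used is not stated in the text; `keep_loudest` shows one hypothetical use.

```python
import math


def truncate_crush(x: float, factor: float) -> float:
    """Multiply, truncate, divide: keep only `factor` steps of resolution."""
    return math.trunc(x * factor) / factor


def bits_to_factor(bits: float) -> float:
    """Continuous bit depth: fractional bit values give in-between step sizes."""
    return 2.0 ** bits


def xor_crush(x: float, mask: int, bits: int = 16) -> float:
    """Quantise to a signed integer, flip bits with XOR, and scale back.

    The integer interpretation is an assumption made for illustration.
    """
    scale = 2 ** (bits - 1) - 1
    q = int(round(x * scale)) ^ mask
    return max(-1.0, min(1.0, q / scale))


def frame_rank(mags):
    """Rank bins of one frame by magnitude: 0 = loudest, len(mags) - 1 = quietest."""
    order = sorted(range(len(mags)), key=lambda k: mags[k], reverse=True)
    ranks = [0] * len(mags)
    for rank, k in enumerate(order):
        ranks[k] = rank
    return ranks


def keep_loudest(mags, k: int):
    """Hypothetical use of the ranks: zero every bin outside the k loudest."""
    return [m if r < k else 0.0 for m, r in zip(mags, frame_rank(mags))]


print(truncate_crush(0.948384243, 1000))      # 0.948
print(frame_rank([0.1, 0.9, 0.5, 0.0]))      # [2, 0, 1, 3]
print(keep_loudest([0.1, 0.9, 0.5, 0.0], 2)) # [0.0, 0.9, 0.5, 0.0]
```

Because `bits_to_factor` accepts fractional values, sweeping it from 8.0 to 7.5 changes the step size smoothly, which is what gives continuous rather than stepped bit reduction.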

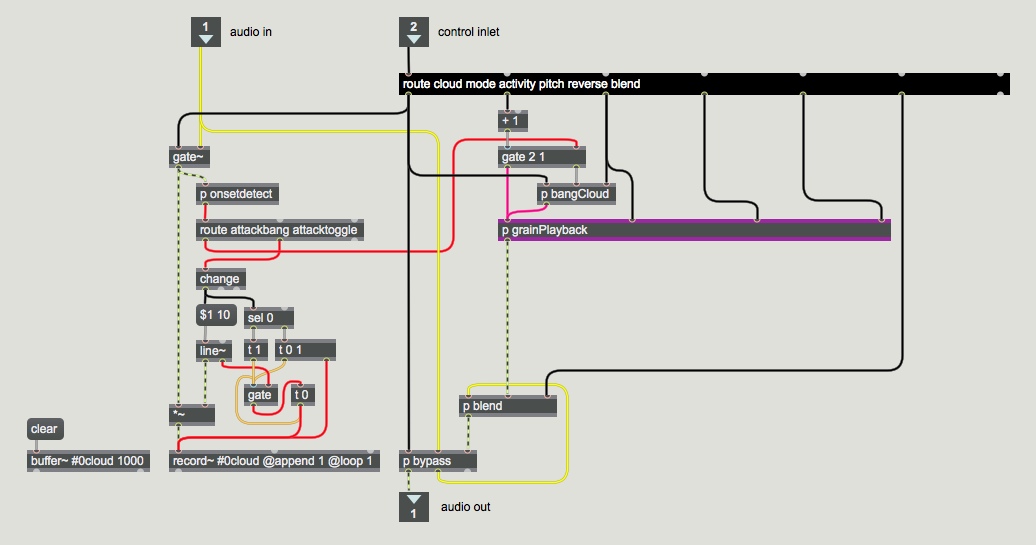

In Cut Glove I developed a new effect that I call cloud. Cloud analyzes incoming audio, and every time an attack or onset is detected, it records a section of the incoming audio to a rolling buffer and plays back a randomly selected section from the same buffer. The rolling buffer keeps the last 1000ms of recorded fragments and is constantly being erased and renewed with new material. Playback has two modes, chunk and grain. In chunk mode, a single segment from the buffer is played back for each attack. In grain mode, a random number of segments are played back for each detected attack. Three parameters control the playback of segments: reverse, pitch, and activity. Reverse allows some of the segments to be played back reversed, and pitch controls the amount of variation in playback speed. Activity is more complex and controls multiple parameters internally, including the number of segments to play back and the rate at which to play them. With these capabilities, cloud can produce a range of sounds that clump or coalesce around the attacks in the incoming audio.
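Here is a rough Python model of the cloud logic, assuming an onset detector elsewhere calls `on_onset` with the audio captured around each attack. The class and its parameters are hypothetical; in particular, how activity maps to segment count and spacing (the `* 8` bound and the 50 ms spacing) is invented for illustration, since the text only says activity controls both.

```python
import random


class Cloud:
    """Hypothetical sketch of an onset-driven rolling-buffer effect."""

    def __init__(self, sr: int = 44100, memory_ms: float = 1000, rng=None):
        self.max_len = int(sr * memory_ms / 1000)  # keep only the last memory_ms
        self.buffer = []
        self.rng = rng or random.Random()

    def on_onset(self, fragment, mode="chunk", seg_len=512,
                 reverse=0.0, pitch=0.0, activity=0.5):
        """Call once per detected attack with the audio captured around it.

        Returns a list of (delay_ms, rate, samples) playback events.
        """
        # Record: append the new fragment and discard the oldest material.
        self.buffer.extend(fragment)
        self.buffer = self.buffer[-self.max_len:]
        if len(self.buffer) < seg_len:
            return []
        # Chunk mode plays one segment; grain mode plays a random number,
        # with `activity` raising the upper bound (hypothetical scaling).
        count = 1 if mode == "chunk" else self.rng.randint(1, 1 + int(activity * 8))
        events = []
        for i in range(count):
            start = self.rng.randrange(len(self.buffer) - seg_len + 1)
            seg = self.buffer[start:start + seg_len]
            if self.rng.random() < reverse:
                seg = seg[::-1]
            rate = 1.0 + self.rng.uniform(-pitch, pitch)  # pitch = spread of rates
            delay_ms = i * (1.0 - activity) * 50.0        # more activity, denser
            events.append((delay_ms, rate, seg))
        return events


cloud = Cloud(sr=1000, rng=random.Random(0))
events = cloud.on_onset([float(i) for i in range(1500)], mode="grain",
                        seg_len=100, reverse=0.5, pitch=0.2, activity=0.7)
print(len(cloud.buffer))  # 1000: only the most recent second is kept
```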

Effect/DSP Chain

One of the initial limitations I wanted to impose on the system was to have only four effect modules, one for each of the four buttons (x/y/a/b). This makes sense physically and structurally in the patch, but it can be limiting if you want to use more than the initial effects. I developed a method to swap out the effect module in each of the four slots, including the ability to use the same effect multiple times. This is done by loading, and then swapping out, a dummy effect slot. This restructuring of the code allows for an expandable set of effect modules that can be loaded as desired. It is a change from the approach I originally took in The Party Van, which had eight fixed effect modules.
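The slot architecture can be modelled as a registry of effect types plus four button-keyed slots. This Python sketch is a hypothetical analogy, not the Max patch: `Bypass` stands in for the dummy slot, `Gain` and `Reverse` are toy effects, and the fixed x/y/a/b processing order is an assumption.

```python
class Bypass:
    """Placeholder ('dummy') effect: passes audio through unchanged."""
    def __call__(self, block):
        return list(block)


class Gain:
    def __init__(self, amount=0.5):
        self.amount = amount

    def __call__(self, block):
        return [s * self.amount for s in block]


class Reverse:
    def __call__(self, block):
        return list(reversed(block))


REGISTRY = {"gain": Gain, "reverse": Reverse}  # hypothetical effect names


class EffectRack:
    BUTTONS = ("x", "y", "a", "b")

    def __init__(self):
        self.slots = {b: Bypass() for b in self.BUTTONS}

    def load(self, button, name):
        # Put the dummy in first, then a fresh instance of the requested effect,
        # so the same effect type can occupy several slots independently.
        self.slots[button] = Bypass()
        self.slots[button] = REGISTRY[name]()

    def process(self, block, engaged):
        """Run the block through every engaged slot in a fixed x/y/a/b order."""
        for b in self.BUTTONS:
            if b in engaged:
                block = self.slots[b](block)
        return block


rack = EffectRack()
rack.load("x", "gain")
rack.load("y", "gain")  # same effect twice
print(rack.process([1.0, 2.0], {"x", "y"}))  # [0.25, 0.5]
```

Adding a new effect then means registering one more entry rather than changing the four-slot structure.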

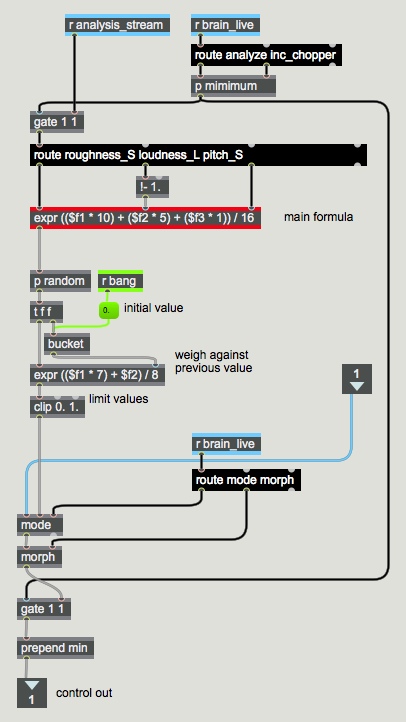

Audio Analysis

Along with the audio analysis in the cloud effect, there are also real-time and off-line (buffer) analyses happening in the patch via the brain modules. The brain modules generate analysis-based presets for each of the effect modules (real-time analysis) and the karma modules (off-line analysis). The idea behind using audio analysis in this way comes from a desire to have complex and detailed sonic output from minimal user input, something that was central to the design of The Party Van. This was implemented in The Party Van originally for practical reasons, as I would often be using it in conjunction with another instrument and didn’t want to micromanage individual parameters. In Cut Glove the idea is to generate musically interesting presets based on the input so that I only need to go into analog takeover for “broad strokes” adjustments. Click here to view a demo video of presets generated by audio analysis.

The karma modules use off-line buffer analysis to determine presets for the slicer and grain modules based on algorithms I tuned by ear when initially developing The Party Van. The analysis algorithms in the effect modules are unique to each parameter and tested with a variety of sound sources. Here is the analysis algorithm for the min parameter of the chopper effect.

In this section of code I weigh together the roughness, loudness, and pitch descriptors of the incoming audio. This is then modified by a small variation that is randomized each time the patch is loaded, and then weighted against the previous analysis value. Each individual parameter is mapped in a similar manner, producing a dynamic foundation on top of which further manipulations and control can be applied.
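Since the patch excerpt itself is not reproduced here, the following Python sketch restates the described steps: a weighted sum of roughness, loudness, and pitch, a small random offset drawn once per load, and smoothing against the previous value. The weights, the 0..1 normalisation of descriptors, the clamp, and the one-pole smoothing formula are all hypothetical choices, not the values in Cut Glove.

```python
import random


class ChopperMinAnalysis:
    """Hypothetical restatement of the described mapping; weights are made up.

    Descriptors are assumed to arrive normalised to 0..1.
    """
    W_ROUGH, W_LOUD, W_PITCH = 0.5, 0.3, 0.2

    def __init__(self, rng=None, variation=0.1, smoothing=0.8):
        rng = rng or random.Random()
        # Drawn once, i.e. once per "patch load".
        self.offset = rng.uniform(-variation, variation)
        self.smoothing = smoothing  # weight given to the previous value
        self.previous = None

    def update(self, roughness, loudness, pitch):
        raw = (self.W_ROUGH * roughness + self.W_LOUD * loudness
               + self.W_PITCH * pitch)
        raw = min(1.0, max(0.0, raw + self.offset))
        if self.previous is None:
            self.previous = raw
        else:
            self.previous = (self.smoothing * self.previous
                             + (1.0 - self.smoothing) * raw)
        return self.previous


analysis = ChopperMinAnalysis(rng=random.Random(0), variation=0.0, smoothing=0.5)
analysis.update(1.0, 1.0, 1.0)         # first value: 1.0
print(analysis.update(0.0, 0.0, 0.0))  # about 0.5 after smoothing
```

The per-load offset means two sessions with the same input produce slightly different presets, while the smoothing stops the parameter from jumping on every analysis frame.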

Throughout the following performance I make use of audio analysis-based effect and playback presets to allow me to focus on the instrumental and interaction aspects of the improvisation.

///////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

The Future

I have many changes, updates, and improvements in mind for Cut Glove v02. The first large-scale addition will be the implementation of game-specific metaphors, starting with some of the Braid gameplay mechanisms. I have two main ideas for how to implement some of the time manipulations from Braid in Cut Glove. In Braid you can rewind time at any point in the game. I want to implement a similar ability in the existing gesture/pattern recorder. In this mode you will be able to scrub backwards through time by replaying, in reverse, the sequence of physical movements you made on the controller. As outlined in the mapping section, playing back a sequence of events in a different order can create consequences that were never present in the original sequence. This gesture time space will be varispeed scrubbable, allowing faster or slower than normal movement through the gesture stream. An extension of this, which is not possible in Braid, is the ability to scrub into a future that hasn’t happened yet. I will implement a predictive machine learning algorithm (via ml.lib) to allow scrubbing into a predicted future based on your input up to that point. This would be transparently incorporated into the scrubbing mechanism, presenting the predictive algorithm as part of the metaphor itself.
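A planned rewind could work by logging timestamped controller events and moving a playhead through the log at any rate, replaying the events it crosses in traversal order. This Python sketch is hypothetical (the class, method names, and event format are invented), and it leaves out the predictive ml.lib extension.

```python
from bisect import bisect_left, bisect_right


class GestureTimeline:
    """Hypothetical log of timestamped controller events, scrubbable at any rate."""

    def __init__(self):
        self.times = []   # event times in seconds, ascending
        self.events = []  # the matching controller events

    def log(self, t, event):
        # Assumes events are logged in time order.
        self.times.append(t)
        self.events.append(event)

    def scrub(self, playhead, rate, dt):
        """Advance the playhead by rate * dt and return (new_playhead, crossed).

        Moving forward returns events in (playhead, new]; moving backward
        returns events in [new, playhead) in reverse order, so a rewind
        replays the gesture backwards. |rate| sets the scrub speed.
        """
        new = playhead + rate * dt
        if new >= playhead:
            lo = bisect_right(self.times, playhead)
            hi = bisect_right(self.times, new)
            crossed = self.events[lo:hi]
        else:
            lo = bisect_left(self.times, new)
            hi = bisect_left(self.times, playhead)
            crossed = self.events[lo:hi][::-1]
        return new, crossed


timeline = GestureTimeline()
for t, e in ((0.1, "A down"), (0.2, "LB down"), (0.3, "A up")):
    timeline.log(t, e)
print(timeline.scrub(0.35, -1.0, 0.2)[1])  # ['A up', 'LB down']
```

Replaying "A up" before "LB down" is exactly the kind of reordering that can produce combinations never present in the original performance.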

A second Braid metaphor I want to implement comes from the ability to create shadow versions of yourself in World 5 of Braid, like in the video below. I will implement this so that you can create out-of-time gestures and then deploy them while executing a separate, complementary gesture. Expanding on this would be the ability to create multidimensional, complex gestures by layering multiple out-of-time gestures, recorded at variable rates, and then deploying them all at once, also at a variable rate. This would allow for the gestural playback of gestural material, a kind of meta-gestural layer.

Feedback, as discussed earlier in this blog post, is another area for future development. I would like to expand the functionality of Max’s hi object to allow bidirectional communication with human interface devices. [If you are a Max external developer and would be interested in taking this on as a commission, please get in touch.] One thing I have learned from using and developing software for monome devices is the power of decoupled LED feedback. The Xbox 360 controller has four LEDs arranged in a ring around the home button. These could easily show which of the four karma modules is being controlled. Additionally, a variety of LED spins, flashes, and X patterns could signal secondary functions, such as record/playback or an engaged effect. It may even be possible to use pulse-width modulation (PWM), turning the LEDs on and off very quickly to create the illusion of a dimmer LED. Even with only four LEDs, these functions could give complex feedback on patch state and functionality.
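Software PWM and the module indicator can be sketched as per-tick on/off states. This Python example only computes the states; actually writing them to the controller would need the bidirectional HID support described above, which Max’s hi object does not currently provide. The function names and the 8-tick period are assumptions.

```python
def pwm_pattern(brightness: float, period: int = 8) -> list:
    """On/off states for one PWM period; the duty cycle sets perceived brightness."""
    on_ticks = round(max(0.0, min(1.0, brightness)) * period)
    return [i < on_ticks for i in range(period)]


def module_leds(active: int, brightness: float = 1.0, period: int = 8) -> list:
    """Per-tick states for the 4 ring LEDs, lighting only LED `active` (0..3).

    Each inner list is one tick: [led0, led1, led2, led3].
    """
    pattern = pwm_pattern(brightness, period)
    return [[(led == active) and pattern[t] for led in range(4)]
            for t in range(period)]


print(pwm_pattern(0.5))         # [True, True, True, True, False, False, False, False]
for frame in module_leds(2, 0.25):
    print(frame)                # LED 2 is on for 2 of every 8 ticks
```

Cycling through the frames fast enough (well above the eye’s flicker threshold) makes LED 2 appear dimmer rather than flashing.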

Access to the controller’s rumble motors would allow for haptic feedback, which, used in conjunction with the LEDs, could form a robust feedback system that doesn’t depend on a screen. In a paper, Lauren Hayes outlines the importance of haptic feedback in software instruments: giving software processes a physical feel can create a finer degree of control and more expressive output. In the same vein, this article on the Apple Watch details the degree of differentiation and specificity that can be derived from different types, intensities, and durations of vibration, particularly when coupled with additional sonic or visual feedback. If a human interface driver were developed for the Xbox One controller, which has independent rumble motors in each trigger, updating Cut Glove to use it would allow for complex, nuanced, and rich haptic feedback.

image from apple.com

The combo and special attack mechanisms described earlier in this post will be further refined and packed into a higher-order control layer, perhaps in the form of a scripting language. At the moment, all of the mapping in Cut Glove is done manually, which is tedious when I want to make big changes. Abstracting as much of the code and control as possible will let me create and manipulate mappings more quickly. I may even use something like libmapper to handle the actual mapping between components.

The preset handling in Cut Glove will be completely refactored. At the moment I am using pattrstorage to store and recall presets, and while this is easy to implement with autopattr objects, it is slow, nearly to the point of being useless. Recalling a preset can sometimes take over a second, which creates a horrible feeling of latency in performance. The answer will be a custom buffer-based storage system to write and recall presets from, allowing for instantaneous preset loading.
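The buffer-based idea amounts to storing every preset as a fixed-size slice of one flat numeric array, so recall is a single contiguous read with no per-parameter lookup. This Python sketch with the standard `array` module is an analogy for a Max buffer~, not the planned implementation; the class name and the fixed parameter order are assumptions.

```python
from array import array


class BufferPresets:
    """Hypothetical flat-array preset store: preset i occupies one fixed slice."""

    def __init__(self, num_presets: int, num_params: int):
        self.num_params = num_params
        # 32-bit floats, like samples in an audio buffer.
        self.data = array("f", [0.0] * (num_presets * num_params))

    def store(self, index: int, values):
        if len(values) != self.num_params:
            raise ValueError("expected %d values" % self.num_params)
        start = index * self.num_params
        self.data[start:start + self.num_params] = array("f", values)

    def recall(self, index: int) -> list:
        start = index * self.num_params
        return self.data[start:start + self.num_params].tolist()


presets = BufferPresets(num_presets=4, num_params=3)
presets.store(2, [0.5, 0.25, 1.0])
print(presets.recall(2))  # [0.5, 0.25, 1.0]
```

The trade-off is that every parameter must have a fixed slot and numeric value, and values are stored at 32-bit float precision, which is usually acceptable for control parameters.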

In addition to the quicker storing and recalling of presets, I will allow for the dynamic loading of effects as part of the preset storage system, so that a preset can be a discrete signal path along with its associated parameter settings. This will allow for a more robust audio processing signal path with many possibilities for expansion. I am currently considering how I could implement something similar to Sam Pluta’s navigable effect matrices. The D-pad and x/y/a/b buttons would be completely remapped to allow for this kind of dynamic signal routing and processing.

from Sam Pluta’s thesis showing signal routing

I have been slowly working on this patch for over a year, and it has been in a performable state since the start of 2015. In that time I’ve had chances to rehearse and reflect on its usage. One thing that stood out to me early on is that when sampling and playback are two separate activities, there can be sonic downtime associated with the act of sampling. Lawrence Casserley discussed this in an interview I saw, where he spoke about his delay-based approach to live sampling. I never noticed this with The Party Van because I would be playing a physical instrument alongside it and switching between the two, so there was no unintended downtime. An important step in dealing with this in Cut Glove was the introduction of the cloud effect explained above. Since it automatically samples and plays back audio, I can turn the effect on and go hunting for audio I want to sample without creating sonic, or mental, downtime.

The development cycle itself has been rewarding, especially from the time I got the patch in a performable state. In developing several patches and incorporating them in my performance setup I have become familiar with the cycle of trusting the machine. This is the name I have given the phenomenon, in a development cycle, of realizing that something not sounding or acting the way I want is now up to me to resolve as a performer rather than programmer. It’s the point at which I adjust myself for the desired results instead of the code. I am now at that point. I trust Cut Glove.

I would like to thank Alex Harker, Sam Andreae, Pierre Alexandre Tremblay, Braxton Sherouse, raja, and Angela Guyton for their time, help, advice, suggestions, support, and knowledge in building this monster of a fucking patch. Thank you!

Click here to download Cut Glove(v01), the required externals, and instruction manual.